Overcoming the Challenges of Microservices Testing & Maximizing the Benefits

Microservices play a huge role in successful software releases. This post covers best practices for testing them.

Microservices have been an industry trend for years now, yet organizations are failing to reap the benefits of the approach and struggling with failed releases. These failures often boil down to the difficulty in testing the interfaces between services to get the quality, security, and performance that’s expected.

In the end, it’s a failure to test these APIs in a robust enough manner. The silver lining is that the testing concepts and solutions for legacy SOA testing and microservices testing are the same. If you can solve your API testing issues, you can improve your microservice releases.

Microservices Introduce New Challenges

These days it’s commonplace to see hundreds if not thousands of microservices grouped together to define a modern architecture.

Highly distributed enterprise systems have big and complex deployments, and this complexity in the deployment is often seen as a reason NOT to implement microservices. In fact, the complexity within the monolith you’re decomposing is just breaking apart into an even more complex deployment environment.

To the disappointment of the uninitiated, complexity isn’t really going away; it’s morphing into a new kind of complexity.

While microservices promise to increase parallel development efficiency, they introduce their own new set of challenges. Here are some examples.

- Many more interactions to test at the API layer, whereas before API testing was limited to just testing externally exposed endpoints.

- Parallel development roadblocks that limit the time-to-market benefits of breaking up the monolith. In practice, dependencies on other services and complex deployment environments reduce parallelism.

- Traditional methods of testing that were successful within the monolith (such as end-to-end UI testing) must now shift to the API layer.

- More potential points of failure due to distributed data and computing, which makes troubleshooting and root cause analysis difficult and complex.

3 Key Steps to Microservices Testing

What are the three key steps to microservices testing? To describe them simply, these steps are:

- Record

- Monitor

- Control

Recording, monitoring, and control are going to help you implement testing approaches that efficiently discover what tests to create while simultaneously helping you automate component tests when there are downstream APIs you need to isolate from.

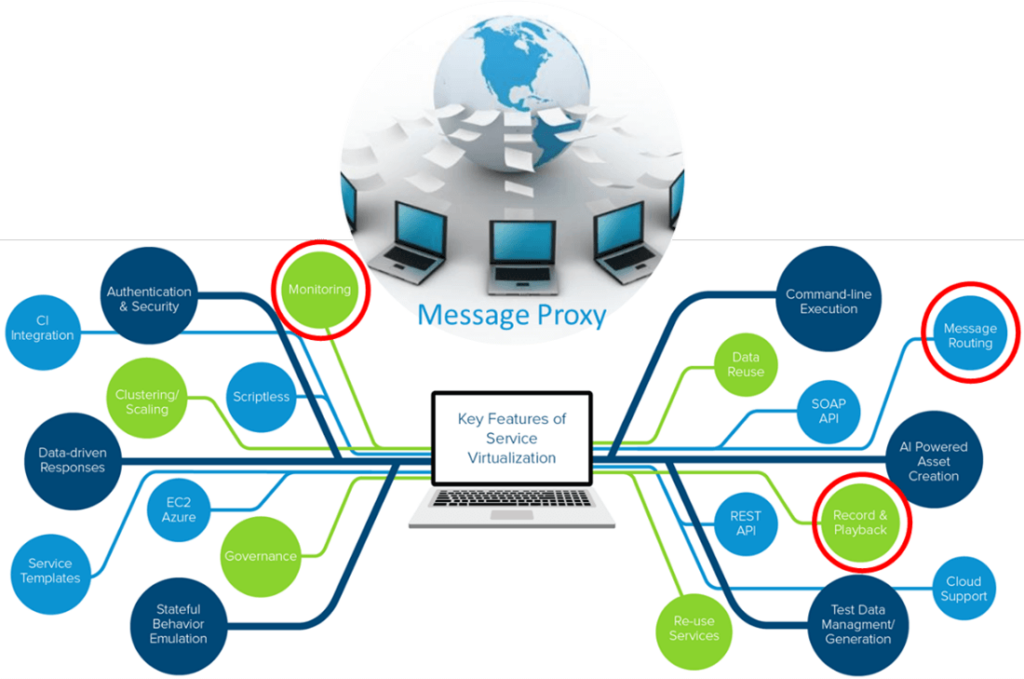

The enabling technology to do this is service virtualization. Service virtualization brings all three of these concepts to life through one fundamental feature: message proxies.

Using message proxies in your deployment environment lets you monitor and record the message flows between APIs, as well as control the destinations where messages are sent.

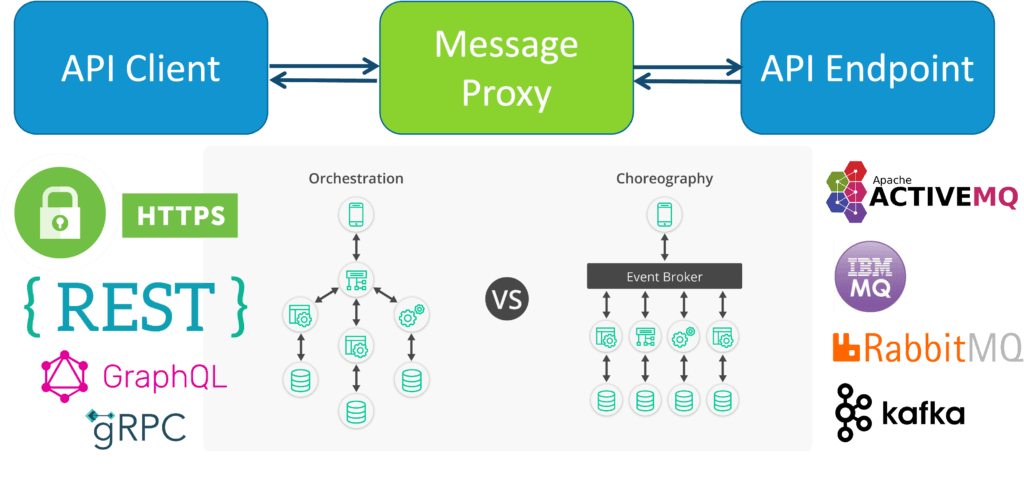

How Does a Message Proxy Work?

A message proxy is designed to listen to a given endpoint or queue and then forward the messages it receives to a destination endpoint or queue. Basically, it’s a man-in-the-middle for your APIs. You actively choose to inject one between your integrated systems. Once it’s in place, you’re ready to start taking advantage of it.
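To make the record, monitor, and control ideas concrete, here is a minimal in-process sketch of a message proxy. It is not how any particular service virtualization product works: the `MessageProxy` class, its `handle` and `route_to` methods, and the two inventory functions are hypothetical names invented for illustration, and plain function calls stand in for real endpoints or queues.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A message is modeled as a plain dict; a destination is any callable
# that takes a request message and returns a response message.
Message = Dict[str, object]
Destination = Callable[[Message], Message]


@dataclass
class MessageProxy:
    """Hypothetical in-process stand-in for a message proxy."""

    destination: Destination
    recorded: List[dict] = field(default_factory=list)

    def handle(self, request: Message) -> Message:
        # Forward the request to whichever destination is currently configured.
        response = self.destination(request)
        # Record the exchange so it can be monitored or replayed later.
        self.recorded.append({"request": request, "response": response})
        return response

    def route_to(self, destination: Destination) -> None:
        # Control: repoint the proxy without changing the client.
        self.destination = destination


def real_inventory(request: Message) -> Message:
    # Stands in for the real downstream service (assumed behavior).
    return {"status": 200, "body": {"sku": request["sku"], "in_stock": 7}}


def virtual_inventory(request: Message) -> Message:
    # Stands in for a simulated version of the same service.
    return {"status": 200, "body": {"sku": request["sku"], "in_stock": 0}}


proxy = MessageProxy(destination=real_inventory)
first = proxy.handle({"sku": "A-1"})    # served by the "real" dependency
proxy.route_to(virtual_inventory)
second = proxy.handle({"sku": "A-1"})   # same client call, now isolated
```

The client code never changes between the two calls; only the proxy's destination does, which is the property that makes the rest of the approach possible.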

API and Microservices Testing

Testing APIs and overcoming the challenges they represent is not that different whether your environment is highly distributed, somewhat distributed, or mostly monolithic. The greater the number of integrations that exist, the more acute some of the challenges will be, but the fundamental approaches are the same.

When thinking about an API or microservice as a black box, we can break it down to the clients of your service and the dependencies of your service. Testing your API as a black box means your test harness is acting as a client to your service whose job is to verify it receives the correct responses back. The dependencies are what your service needs to integrate with to function properly. There are optimizations to be made on both the client side and among the dependencies.

Before exploring these optimizations, let’s begin at the design phase, where the microservice is planned, and where the testing strategy should begin.

The Microservice Life Cycle

Design Phase: Define Requirements for Clarity

A well-accepted API development best practice is to implement a service definition during the design phase. For RESTful services, the OpenAPI specification is commonly used.

These service definitions are used by your clients to understand what resources and operations your service supports and how your service expects to receive and send data. Within the service definitions for REST services will be JSON schemas that describe the structure of the message bodies, so client applications know how to work with your API.

But service definitions aren’t just important for helping other teams understand how your API works; they also have positive impacts on your testing strategy.

You can think of the service definition like a contract. That’s the basis to start building a strategy for API governance. One of the biggest challenges in API testing, just like any software testing, is dealing with change.

With the popularity of Agile practices, change has never been more rapid. Enforcing these contracts is the first step in bringing order to chaos when you’re trying to wrangle many teams that are building APIs and then expecting all these APIs to somehow play nice with each other.

Validating & Enforcing Contracts

How does one go about enforcing a contract?

As part of any automated regression suite, you should have checks for whether the service definition your team has written has any mistakes in it. Swagger and OpenAPI have schemas of their own that define how the service definition must be written. You can use them to automate these checks early in the API development life cycle.

Then, besides verifying the contract itself, you want to check that the responses your service returns also conform to the contract. Your API testing framework should have support built in to catch instances where your API is returning a response that deviates from its service definition’s schema.
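As a rough illustration of checking a response against its contract, the sketch below validates a response body against a small schema. It implements only a tiny subset of JSON Schema (`type`, `required`, `properties`, `items`) by hand so that it has no dependencies; a real project would more likely rely on the schema validation built into its API testing framework. The `violations` function and `ORDER_SCHEMA` are hypothetical names.

```python
from typing import Any, Dict, List

# Map JSON Schema type names to Python types (subset only).
_TYPES = {
    "object": dict,
    "array": list,
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
}


def violations(value: Any, schema: Dict[str, Any], path: str = "$") -> List[str]:
    """Return a list of human-readable contract violations (empty if none)."""
    errors: List[str] = []
    expected = schema.get("type")
    if expected is not None:
        # bool is a subclass of int in Python, so reject it for numeric types.
        bool_as_number = isinstance(value, bool) and expected in ("integer", "number")
        if not isinstance(value, _TYPES[expected]) or bool_as_number:
            return [f"{path}: expected {expected}, got {type(value).__name__}"]
    if isinstance(value, dict):
        for name in schema.get("required", []):
            if name not in value:
                errors.append(f"{path}.{name}: required property is missing")
        for name, sub_schema in schema.get("properties", {}).items():
            if name in value:
                errors.extend(violations(value[name], sub_schema, f"{path}.{name}"))
    if isinstance(value, list) and "items" in schema:
        for index, item in enumerate(value):
            errors.extend(violations(item, schema["items"], f"{path}[{index}]"))
    return errors


# Hypothetical response schema, as it might appear in a service definition.
ORDER_SCHEMA = {
    "type": "object",
    "required": ["id", "items"],
    "properties": {
        "id": {"type": "string"},
        "items": {
            "type": "array",
            "items": {
                "type": "object",
                "required": ["sku", "quantity"],
                "properties": {
                    "sku": {"type": "string"},
                    "quantity": {"type": "integer"},
                },
            },
        },
    },
}

conforming = {"id": "o-1", "items": [{"sku": "A-1", "quantity": 2}]}
deviating = {"id": 17, "items": [{"sku": "A-1", "quantity": "2"}]}

conforming_errors = violations(conforming, ORDER_SCHEMA)
deviating_errors = violations(deviating, ORDER_SCHEMA)
```

Running a check like this on every response in a regression suite turns the service definition into something the build can enforce instead of something teams are merely asked to follow.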

Think of it like this. A car is made up of thousands of individual parts that all need to fit perfectly.

If the team responsible for the power unit delivers an engine that deviates from the design spec, it’s likely you’re going to have big problems when you try to hook up the transmission that a different team built because they were referencing the engine’s design to know where the bolts need to line up.

That’s what you’re checking for here. These are the kinds of integration problems that good API governance can help you avoid. Designing by and conforming to these contracts should be one of the first concerns of your API testing practice.

Service definition contracts can also help your testing process be more resilient to change. Refactoring test cases to match changes in an API can take long enough that testing gets pushed outside of the sprint.

This is both a quality and security risk. It also means unscheduled time for extra testing. An API testing framework needs to help teams bulk-refactor existing test cases as an API’s design changes so they can keep up with the fast pace of Agile and in-sprint testing. Service contracts and the right tooling can make this much less painful.

Implementation Phase: Apply Best Practices

Microservices development doesn’t imply a free pass to skip unit testing. Code is code and unit testing is a fundamental quality practice. It helps teams catch regressions quickly, early, and in a manner that’s easy for developers to remediate—no matter the kind of software.

The ugly truth is that software teams with nonexistent or reactive unit testing practices tend to have poor quality outcomes. The overhead for unit testing is considered too much and therefore many managers and leaders don’t prioritize it.

This is unfortunate because the market for tools has matured to the point where unit testing is much easier and more productive for developers, and the tradeoff between cost and quality is much smaller than it used to be. Unit testing forms the foundation of a solid testing practice and shouldn’t be shortchanged.

In addition, software quality practices for developers have matured with dedicated coding standards for API development. In 2019, the international nonprofit OWASP released the OWASP API Security Top 10.

Coding standards like this help microservices teams avoid common security and reliability anti-patterns that could introduce business risk to their projects. Trying to adopt a coding standard without tools is nearly impossible.

Luckily, modern static analysis tools, also known as static application security testing (SAST) tools, are staying current with industry standards and will have support for this standard. Developers can scan their code as they’re writing it and as a part of their continuous integration process to make sure nothing gets missed.

Component Testing Phase: Use Proxies for API Dependencies

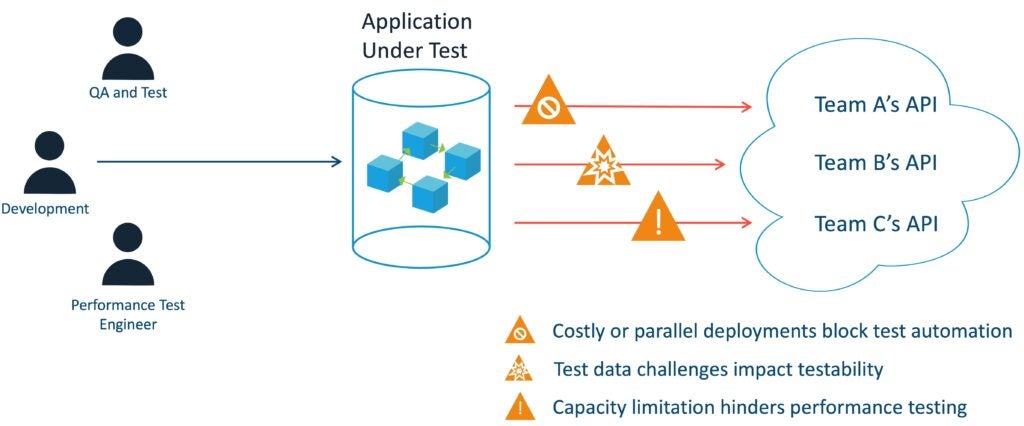

Component testing implies testing your microservice in isolation. Achieving true isolation has challenges like knowing what to do about your microservice’s dependencies. Furthermore, one of the most difficult things to anticipate as a microservice developer is understanding exactly how your API is going to be used by other systems.

Client applications to your API are likely to find creative uses of your microservices that were never considered. This is both a business blessing and an engineering curse, and exactly why it’s important to expend energy to understand the use cases for your API.

API governance during the design phase is an important first step, but even with well-defined contracts and automated schema validation of your microservice’s responses, you’ll never be able to fully predict how the needs of the end-to-end product are going to evolve, and how that will impact the microservices within your domain.

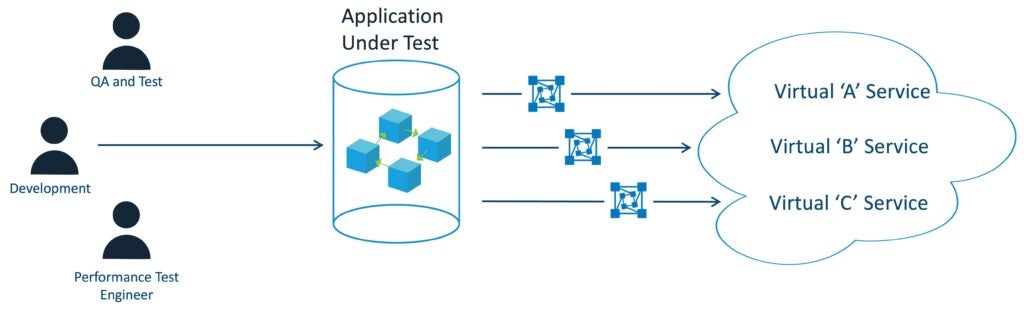

Recording, monitoring, and control are going to help you implement testing approaches that efficiently discover what tests are needed while simultaneously helping you automate component tests when there are downstream APIs you need to isolate from. The enabling technology to do this is service virtualization.

Service virtualization brings all three of these concepts to life through one fundamental feature: message proxies. Using message proxies lets you monitor and record the message flows between APIs, as well as control the destinations where messages are sent.

Orchestrated vs Choreographed Services

Orchestrated and choreographed (or reactive) services are fancy terms that describe synchronous and asynchronous messaging patterns, respectively.

If your service is communicating through a message broker using technologies like AMQP, Kafka, or JMS, then you’re testing a choreographed or reactive service.

If you’re testing a REST or GraphQL interface, that’s an orchestrated, or synchronous, service.

For the purposes of this post, it won’t really matter what protocol and message exchange pattern you’re contending with. However, you may find it is more difficult to apply these principles with asynchronous messaging if your API testing framework doesn’t have support for the protocols your organization has chosen to adopt.

Capture Client Usage Scenarios

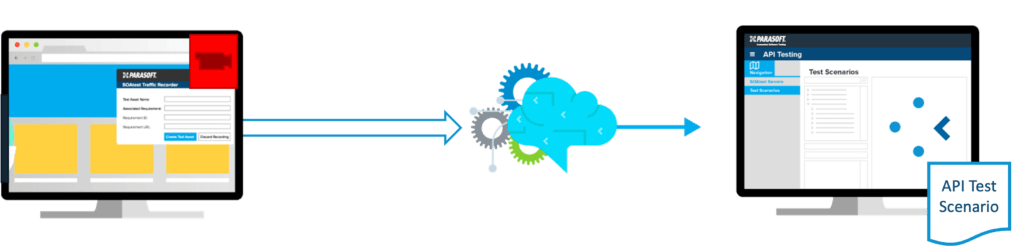

It’s difficult for a microservice team to predict how other teams will use their API, and end-to-end, fully integrated testing is expensive and slow. The message proxying capabilities found in service virtualization tools let you record traffic coming from upstream client applications. The recordings capture realistic usage scenarios that significantly improve your knowledge of what tests should run within your CI/CD pipeline, without requiring those client applications to always be present to trigger that traffic.

In other words, the recording enables you to replay these integration scenarios for automated regression testing that’s a lot simpler and easier to manage than asking clients to run their tests, which transitively test your API. This is why tools that combine service virtualization with API testing are so popular. They make it easy to record this API traffic and then leverage it for API scenario testing that’s brought under your control.
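The replay idea can be sketched in a few lines. This example assumes the recording is a list of request/response pairs; that shape, the `RECORDED_TRAFFIC` data, and the `quote_service` under test are all hypothetical and chosen only for illustration.

```python
from typing import Callable, Dict, List

Message = Dict[str, object]

# Hypothetical recording: one request/response pair per captured client call.
RECORDED_TRAFFIC: List[Dict[str, Message]] = [
    {
        "request": {"sku": "A-1", "quantity": 2},
        "response": {"status": 200, "total": 20},
    },
    {
        "request": {"sku": "A-1", "quantity": 0},
        "response": {"status": 400, "error": "quantity must be positive"},
    },
]

PRICES = {"A-1": 10}


def quote_service(request: Message) -> Message:
    """Hypothetical service under test: prices a single order line."""
    quantity = request["quantity"]
    if quantity <= 0:
        return {"status": 400, "error": "quantity must be positive"}
    return {"status": 200, "total": PRICES[request["sku"]] * quantity}


def replay(
    recordings: List[Dict[str, Message]],
    service: Callable[[Message], Message],
) -> List[str]:
    """Send each recorded request to the service and report any response
    that differs from what was recorded."""
    failures = []
    for index, exchange in enumerate(recordings):
        actual = service(exchange["request"])
        if actual != exchange["response"]:
            failures.append(
                f"exchange {index}: expected {exchange['response']}, got {actual}"
            )
    return failures


failures = replay(RECORDED_TRAFFIC, quote_service)
```

An exact-match comparison is the simplest possible assertion. Real recordings usually contain timestamps, IDs, or other volatile fields that would need to be masked or compared more loosely.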

Make Dependencies Manageable

Testing your service quickly becomes difficult because of its reliance on other APIs in a test environment, which brings issues with availability, realistic test data, and capacity.

This can push the testing effort out of the sprint and make it difficult for teams to detect integration issues early enough to deal with them. This is the traditional use case for service virtualization: the technology simulates, or mocks, the responses of downstream APIs, creating a virtual version of the service. That isolation lets you fully test your API earlier and more easily within your CI/CD pipeline.

When message proxies are deployed in an environment, teams can record API traffic and then build virtual services that faithfully and realistically respond to the microservice (including stateful transactions where the simulated dependency must properly handle PUT, POST, and DELETE operations) without having the real dependency available.

Stateful service virtualization is an important feature for creating realistic virtual services that cover all of your testing use cases.
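To show what "stateful" means here, the following is a minimal sketch of a virtual service that remembers what earlier requests did, so a POST followed by a GET behaves the way the real dependency would. The `StatefulVirtualService` class and the `/customers` resource are hypothetical, and the HTTP layer is reduced to a method, a path, and a body.

```python
from typing import Dict, Optional, Tuple

Response = Tuple[int, Optional[dict]]  # (status code, response body)


class StatefulVirtualService:
    """Hypothetical virtual service for a /customers resource."""

    def __init__(self) -> None:
        self._customers: Dict[str, dict] = {}
        self._next_id = 1

    def handle(self, method: str, path: str, body: Optional[dict] = None) -> Response:
        parts = [part for part in path.split("/") if part]
        if not parts or parts[0] != "customers" or len(parts) > 2:
            return 404, None

        # Collection endpoint: /customers
        if len(parts) == 1:
            if method == "POST":
                customer_id = str(self._next_id)
                self._next_id += 1
                self._customers[customer_id] = {**(body or {}), "id": customer_id}
                return 201, self._customers[customer_id]
            return 405, None

        # Item endpoint: /customers/{id}
        customer_id = parts[1]
        if customer_id not in self._customers:
            return 404, None
        if method == "GET":
            return 200, self._customers[customer_id]
        if method == "PUT":
            self._customers[customer_id] = {**(body or {}), "id": customer_id}
            return 200, self._customers[customer_id]
        if method == "DELETE":
            del self._customers[customer_id]
            return 204, None
        return 405, None


service = StatefulVirtualService()
created = service.handle("POST", "/customers", {"name": "Ada"})
updated = service.handle("PUT", "/customers/1", {"name": "Ada L."})
fetched = service.handle("GET", "/customers/1")
deleted = service.handle("DELETE", "/customers/1")
missing = service.handle("GET", "/customers/1")
```

A stateless mock would return the same canned body for every GET, which is exactly the kind of unrealistic behavior that hides integration defects.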

Integrated Testing Phase: Control the Test Environment

Let’s now zoom out the testing scope a bit further to integration testing. At this stage, sometimes referred to as the system-integration-test or SIT stage, the testing environment is a production-like environment intended to catch defects that earlier stages missed.

You should expect message proxies to provide control over whether you want an isolated or real-world connection to dependencies. Another aspect of control is making sure the message proxies can easily be managed via API. Organizations with high maturity in their CI/CD processes are automating deploy and destroy workflows where the programmatic control of the message proxy is a must-have requirement.

What optimizations can you extract from the visibility—or observability—that the message proxies expose when you’re in the integration testing phase?

This is where monitoring capabilities are essential for a service virtualization solution that’s supporting automated testing. The monitoring from message proxies will expose the inner workings of these complex workflows so that you can implement a better test that tells you where the problem lies, not just that a problem exists.

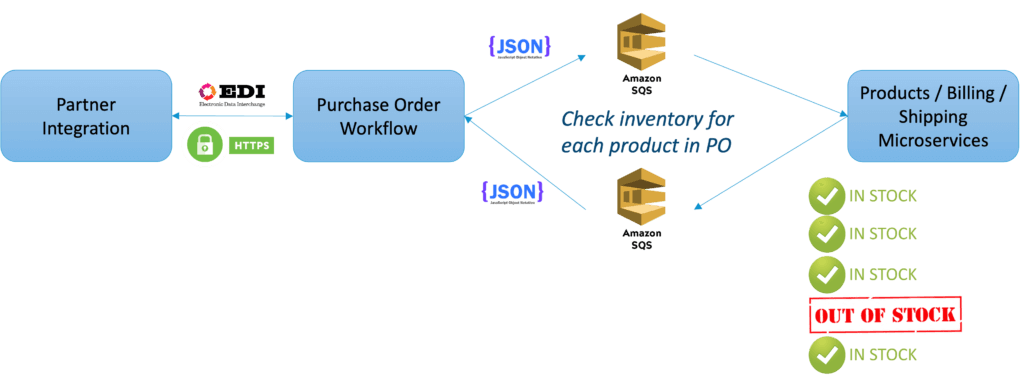

Consider, for example, an order processing system that needs to check multiple downstream services like inventory, billing, and shipping systems to fulfill an order. For a given test input, you can assert against certain behind-the-scenes behavior that helps developers pinpoint why a test is failing. When your team spends less time figuring out why a problem occurred, they have more time to actually fix it.
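The order processing example might look like the sketch below. It assumes each downstream dependency is reached through a proxy that logs every call, so a test can assert on which services were contacted and not only on the final result. `MonitoringProxy`, `place_order`, and the stubbed inventory, billing, and shipping behaviors are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Message = Dict[str, object]
Service = Callable[[Message], Message]


class MonitoringProxy:
    """Hypothetical proxy that logs each call before forwarding it."""

    def __init__(self, name: str, destination: Service,
                 log: List[Tuple[str, Message]]) -> None:
        self.name = name
        self.destination = destination
        self.log = log

    def __call__(self, request: Message) -> Message:
        self.log.append((self.name, request))
        return self.destination(request)


def place_order(order: Message, inventory: Service,
                billing: Service, shipping: Service) -> Message:
    """Hypothetical order workflow: check stock, then bill, then ship."""
    stock = inventory({"sku": order["sku"]})
    if stock["available"] < order["quantity"]:
        return {"status": "rejected", "reason": "out_of_stock"}
    billing({"customer": order["customer"],
             "amount": order["quantity"] * order["unit_price"]})
    shipping({"sku": order["sku"], "quantity": order["quantity"]})
    return {"status": "accepted"}


def run_scenario(available: int) -> Tuple[Message, List[str]]:
    # One shared log shows the order in which dependencies were called.
    log: List[Tuple[str, Message]] = []
    inventory = MonitoringProxy("inventory", lambda request: {"available": available}, log)
    billing = MonitoringProxy("billing", lambda request: {"charged": True}, log)
    shipping = MonitoringProxy("shipping", lambda request: {"scheduled": True}, log)
    order = {"customer": "c-1", "sku": "A-1", "quantity": 2, "unit_price": 10}
    result = place_order(order, inventory, billing, shipping)
    return result, [name for name, _ in log]


accepted, accepted_calls = run_scenario(available=5)
rejected, rejected_calls = run_scenario(available=1)
```

If a rejected order ever shows a billing call in the log, the test points straight at the faulty step, which is the "where, not just that" benefit described above.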

Five Tips for Testing Microservices

Here are five tips to help you develop a microservices testing strategy. Bear in mind that these are suggestions. Like all types of testing plans, you need to consider your setup’s specifics.

- Look at each service as one software module. Perform unit tests on a service like you would on any new code. In a microservices architecture, every service is considered a black box. Therefore, test each similarly.

- Determine the fundamental links in the architecture and test them. For example, if a solid link exists between the login service, the frontend that displays the user’s details, and the database for the details, test these links.

- Don’t just test happy path scenarios. Microservices can fail and it’s important to simulate failure scenarios to build resilience in your system.

- Do your best to test across stages. Experience has proven that testers who use a diverse mix of testing practices, starting in development and progressing through larger testing scopes, not only increase the chance that bugs will reveal themselves, but they do so efficiently. This is particularly true in complicated virtual environments where small differences exist between various libraries and where underlying hardware architecture may produce unforeseen, undesirable results in spite of the virtualization layer.

- Use “canary testing” on new code and test on real users. Make sure all of the code is well-instrumented, and use all the monitoring your platform provider offers. This pairs “shift-left” testing with “shift-right” testing because you are also testing “in the wild.”
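The third tip, simulating failure scenarios, is one place where a virtual dependency is especially handy, because a real service rarely fails on demand. The sketch below is one possible illustration: a virtual service that returns 503 a configurable number of times, and a simple retrying caller to exercise against it. `FlakyVirtualService` and `call_with_retry` are hypothetical names, and retrying is only one of several resilience strategies a team might want to test.

```python
from typing import Dict

Message = Dict[str, object]


class FlakyVirtualService:
    """Hypothetical virtual dependency that fails a set number of times
    before it starts responding successfully."""

    def __init__(self, failures_before_success: int) -> None:
        self.remaining_failures = failures_before_success
        self.calls = 0

    def __call__(self, request: Message) -> Message:
        self.calls += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            return {"status": 503}
        return {"status": 200, "body": {"echo": request}}


def call_with_retry(service, request: Message, attempts: int) -> Message:
    """Call the service up to `attempts` times, stopping at the first
    response that is not a 503."""
    response: Message = {"status": 503}
    for _ in range(attempts):
        response = service(request)
        if response["status"] != 503:
            break
    return response


# Scenario 1: the dependency recovers within the retry budget.
recovering = FlakyVirtualService(failures_before_success=2)
recovered = call_with_retry(recovering, {"id": 1}, attempts=3)

# Scenario 2: the dependency stays down longer than the retry budget.
down = FlakyVirtualService(failures_before_success=5)
gave_up = call_with_retry(down, {"id": 1}, attempts=3)
```

Both scenarios are deterministic, so they can run in a CI/CD pipeline without waiting for a real outage.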

Demystifying Microservices

Microservices are here to stay. Unfortunately, organizations are failing to reap the benefits of the approach. It boils down to the difficulty in testing the interfaces between distributed systems (that is, the APIs) to get the quality, security, and performance that’s expected.

What’s needed is an approach to microservices testing that enables the discovery, creation, and automation of component tests. The enabling technologies are message proxies and service virtualization that are tightly integrated with a feature-rich API testing framework.

Message proxies enable recording of API traffic, monitoring to discover scenarios and use cases, and control to manage and automate suites of API tests. Coupled with service virtualization, automated microservice testing becomes an achievable reality.