Verification vs Validation in Embedded Software

Verification and validation in embedded software are often confused. The two terms sound similar, but they differ in noteworthy ways. This post explains the differences.

How Test Automation Accelerates Both Verification & Validation

Ensuring correct operation, quality, safety, and security accounts for a substantial portion of the software effort in embedded systems. Teams accomplish much of this through software testing, supported throughout development by analysis, traceability, documentation, and so on. Safety-critical software follows rigorous approaches to verification and validation that are often codified in industry standards. That raises the question: what’s the difference?

What’s the Difference Between Verification & Validation?

The IEEE Standard Glossary of Software Engineering Terminology gives the official definitions of verification and validation.

Verification: The process of determining whether or not the products of a given phase of the software development cycle fulfill the requirements established during the previous phase.

Validation: The process of evaluating software at the end of the software development process to ensure compliance with software requirements.

Barry Boehm offered more succinct definitions in Verifying and Validating Software Requirements and Design Specifications:

Verification: “Am I building the product right?”

Validation: “Am I building the right product?”

These definitions get to the crux of the difference between these two key aspects of testing.

The Goal of Verification & Validation

The ultimate goal is to build the right product. More than that, it’s to give the product the quality, safety, and security it needs to remain the right product.

Verification is part of the software development process that ensures the work is correct. Software verification usually includes:

- Conformance to industry standards, ensuring process and artifacts meet the guidelines.

- Reviews, walkthroughs, inspections.

- Static code analysis and other activities on artifacts produced during development.

- Enforcing architecture, design, and coding standards.

Validation is demonstrating that the end product meets the requirements. Those requirements encompass functionality plus reliability, performance, safety, and security.

For physical products, validation includes customers seeing, trying, and testing the product themselves. But for software, validation consists of the execution of the software and demonstration of it running. It typically involves:

- Code execution to demonstrate correct functionality.

- Execution in target environments.

- Stress, performance, penetration, and other non-functional testing.

- Acceptance testing with customers, directly and often.

- Using artifacts from verification processes to illustrate traceability of requirements to end functionality, especially for specific safety and security functions.

It’s important to understand the differences between verification and validation goals, and that software development needs both. These activities are a major part of the effort that goes into software development. Organizations are always looking to streamline them without compromising safety, security, or quality.

How to Approach Verification & Validation

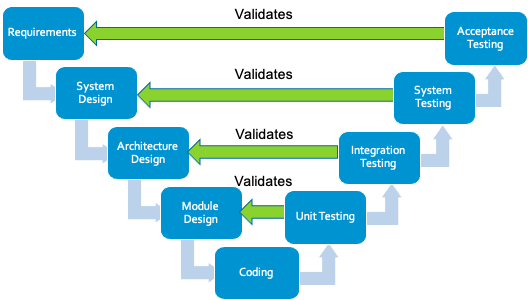

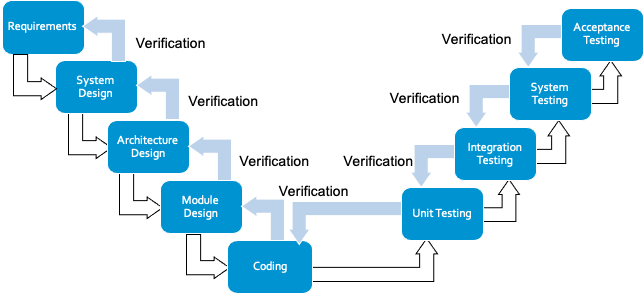

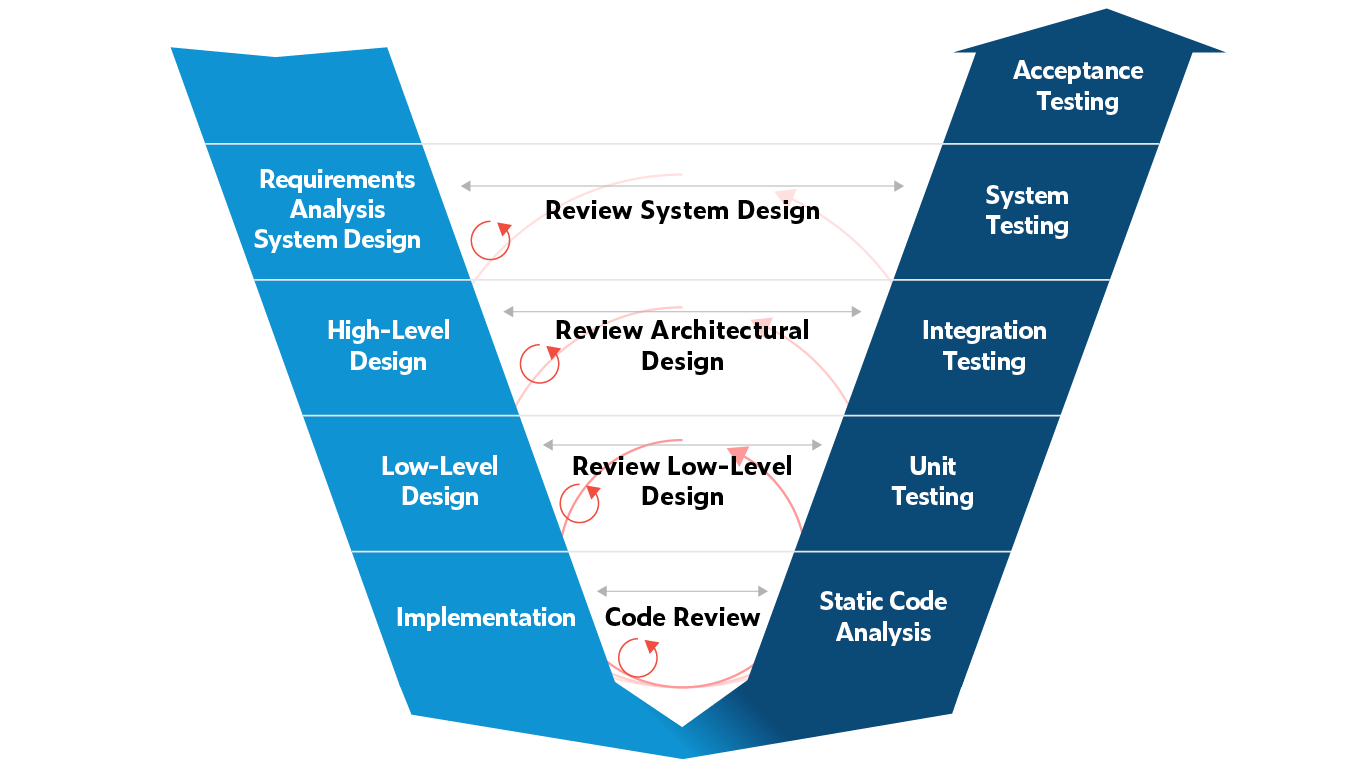

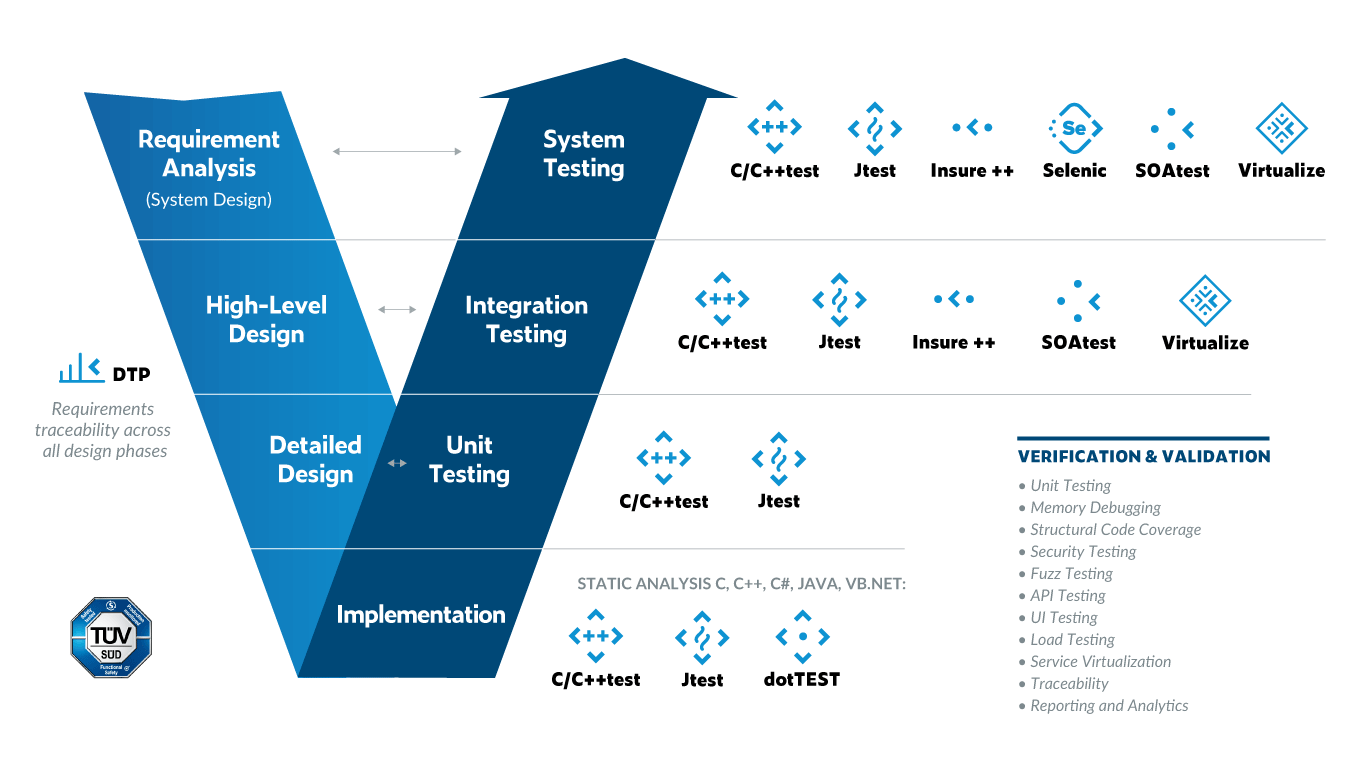

The V-model shows the more formal approach to verification and validation used in developing safety-critical software. It illustrates the activities at each stage of development and the relationships between them.

The V-model is good for illustrating the relationship between the stages of development and stages of validation. At each testing stage, more complete portions of the software are validated against the phase that defines it. However, the V-model might imply a waterfall development method. There are ways to incorporate Agile, DevOps, and CI/CD into this type of product development.

Verification, on the other hand, ensures that each stage is complete and done properly.

Verification involves reviews, walkthroughs, analysis, traceability, test and code coverage, and other activities to make sure teams are following the process and building the product correctly. As an example, executing unit tests is a validation activity, while ensuring traceability, code coverage, and testing progress of those unit tests is verification. The key role of verification is to ensure that the artifacts each stage delivers are built to the specification from the previous stage and comply with company and industry guidelines.
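The split between running unit tests (validation) and auditing them (verification) can be sketched in a few lines of Python. This is a minimal illustration only: the requirement IDs, the `clamp_duty_cycle` unit, and the `requirement` decorator are all hypothetical and do not come from any particular tool or standard.

```python
# Hypothetical requirement IDs; a real project would pull these from its
# requirements management tool.
REQUIREMENTS = {"REQ-001", "REQ-002", "REQ-003"}


def clamp_duty_cycle(percent):
    """Unit under test: limit a PWM duty cycle to the range 0..100."""
    return max(0, min(100, percent))


def requirement(req_id):
    """Decorator that records which requirement a test is meant to cover."""
    def tag(test_func):
        test_func.requirement = req_id
        return test_func
    return tag


@requirement("REQ-001")
def test_clamps_high_values():
    assert clamp_duty_cycle(150) == 100


@requirement("REQ-002")
def test_clamps_negative_values():
    assert clamp_duty_cycle(-5) == 0


TESTS = [test_clamps_high_values, test_clamps_negative_values]


def run_tests(tests):
    """Validation: execute each test and record pass/fail by test name."""
    results = {}
    for test in tests:
        try:
            test()
            results[test.__name__] = True
        except AssertionError:
            results[test.__name__] = False
    return results


def untested_requirements(requirements, tests, results):
    """Verification: list requirements with no passing test traced to them."""
    covered = {t.requirement for t in tests if results.get(t.__name__)}
    return sorted(requirements - covered)


results = run_tests(TESTS)
gaps = untested_requirements(REQUIREMENTS, TESTS, results)
print(results)
print(gaps)  # REQ-003 has no test, so it is reported as a gap
```

Both tests pass, yet the audit still reports a gap. That is the point of the distinction: a green test run says the executed code behaves correctly, while verification asks whether the testing itself is complete.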

Verification and Validation Naming Confusion

Organizations are likely to use verification and validation in slightly different ways. It’s common practice to consider validation an activity that happens near the end of software development where teams present the product to the customer (or testers acting as their proxies) and demonstrate meeting all the requirements. Embedded development still uses the concept of a factory acceptance test (FAT).

Another notable difference in philosophy appears in DO-178B/C and related standards for developing safety-critical avionics software, which provide no guidance on validation testing. In fact, validation is considered redundant if verification is complete.

“Validation is the process of determining that the software requirements are correct and complete. DO-178C does not provide guidance for software validation testing because it is reasoned that software that is verified to be correct should, in theory, have no validation problems during software integration testing, unless the software requirements are incomplete or incorrect.” – Certification of Safety-Critical Software Under DO-178C and DO-278A, Stephen A. Jacklin, NASA Ames Research Center

Although I think Boehm’s definition corresponds to the more common usage of the terms, there are likely people who might consider unit and software integration testing as verification steps rather than validation.

Hybrid DevOps Pipelines for Safety-Critical Software

In many embedded software organizations, the implementation of a fully Agile process isn’t compatible with the restrictions that industry safety and security standards place on them. Artifacts, code, test results, and documentation often have required and set delivery dates. Progress is based on these deliverables (milestones).

In some cases, such as large military and defense projects, milestone deliverables are built into the contract and payment arrangements. Although this implies a waterfall approach, there’s no reason to limit software development to it. Teams can use hybrid models to achieve milestones for deliverables using iterative and Agile methods internally.

The reason to bring this up in a discussion of verification and validation is that teams can apply many of the benefits of continuous integration and testing to complex safety-critical applications. Part of that is shifting verification and validation left, to as early in the development process as possible. For example, there’s no reason to delay unit testing until all the units are coded, or to hold off on static analysis until the code is ready for integration.

Similarly, developers should attempt integration testing as soon as unit-tested components are ready. Combining automation with a continuous, iterative approach provides huge benefits for software validation and verification. Parasoft has tools that address testing, quality, and security at all phases of the SDLC.

Accelerating Verification

Verification involves the work to ensure each phase of development is fulfilling the specification of the previous step. In terms of software coding and testing, verification is making sure that the code satisfies the module design and, ultimately, the high-level design and requirements above that.

Furthermore, verification ensures meeting project-level requirements. Such requirements include compliance with industry standards, risk management, traceability, and metrics (code coverage and compliance). Parasoft’s software test automation tools accelerate verification by automating the many tedious aspects of record keeping, documentation, analysis, and reporting:

- Use static analysis as early as possible to ensure quality and security as developers write code. Static analysis also helps prevent future bugs and vulnerabilities, reducing the downstream impact of bugs missed during inspection and testing.

- Automating coding standards compliance to reduce manual effort and accelerate code inspections.

- Two-way traceability for all artifacts to make sure requirements have code and tests to prove they are being fulfilled. Metrics, test results, and static analysis results are traced to components and vice versa.

- Code and test coverage to make sure all requirements are implemented and to make sure the implementation is tested as required.

- Reporting and analytics to help decision making and keep track of progress. Decision making needs to be based on data collected from the automated processes.

- Automated documentation generation from analytics and test results to support process and standards compliance.

- Standards compliance automation to reduce overhead and complexity by automating the most repetitive and tedious processes. Tools can also keep track of the project history and relate results to requirements, software components, tests, and recorded deviations.
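As a rough illustration of the coverage item in the list above, an automated coverage gate might look like the following sketch. The module names, line counts, and the 90% threshold are hypothetical. They stand in for whatever a real coverage tool reports and whatever a project's own policy or applicable standard requires. This is not a description of how any Parasoft product works.

```python
# Hypothetical per-module line counts, as a coverage tool might report them.
COVERAGE = {
    "motor_control.c": {"executed": 190, "total": 200},
    "can_driver.c": {"executed": 120, "total": 160},
    "diagnostics.c": {"executed": 45, "total": 50},
}

# Assumed project policy for this sketch; not taken from any standard.
REQUIRED_PERCENT = 90.0


def coverage_percent(entry):
    """Return line coverage as a percentage; empty modules count as covered."""
    if entry["total"] == 0:
        return 100.0
    return 100.0 * entry["executed"] / entry["total"]


def modules_below_threshold(coverage, required):
    """Verification check: list modules whose coverage misses the target."""
    return sorted(
        name
        for name, entry in coverage.items()
        if coverage_percent(entry) < required
    )


failing = modules_below_threshold(COVERAGE, REQUIRED_PERCENT)
for name in failing:
    print(f"{name}: {coverage_percent(COVERAGE[name]):.1f}% < {REQUIRED_PERCENT}%")
```

Running a check like this on every build turns a manual review item into data that reporting and decision making can draw on.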

Accelerating Validation

Validation is proving that a product meets its requirements. It requires executing code, either in isolation for unit tests or at various stages of integration. Automating these test suites is a huge time saver for embedded software development.

Validation requires execution on target hardware. Optimizing regression testing makes the best use of available resources, people, and hardware. Parasoft test automation tools accelerate the validation process by reducing the dependence on manual testing while maintaining traceability and code coverage from all results:

- Automation of all test suites minimizes manual testing and reduces the testing bottleneck from limited hardware availability.

- Target and host-based test execution supports different validation techniques as required.

- Shift-left testing starts as soon as teams develop code. It leverages unit testing frameworks and automatically generates harnesses to test as soon as code is ready. Support for test-driven development and continuous testing is available as an organization’s process matures.

- Manage change with smart test execution to focus on tests only for code that changed and any impacted dependents.

- Two-way traceability among code, tests, static analysis results, and requirements and support for company-wide application lifecycle management (ALM) tools.
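The idea behind change-based test execution in the list above can be sketched as a walk over a dependency graph: start from the changed modules, collect everything that depends on them, and run only the tests mapped to that set. The module names, dependency map, and test names below are hypothetical, and this is a generic illustration rather than the algorithm any specific tool uses.

```python
from collections import deque

# Hypothetical map: module -> modules that depend on it directly.
DEPENDENTS = {
    "adc_driver": ["sensor_filter"],
    "sensor_filter": ["control_loop"],
    "control_loop": [],
    "can_driver": ["diagnostics"],
    "diagnostics": [],
}

# Hypothetical map: module -> tests that exercise it.
TESTS_BY_MODULE = {
    "adc_driver": ["test_adc_read"],
    "sensor_filter": ["test_filter_step"],
    "control_loop": ["test_loop_stability"],
    "can_driver": ["test_can_tx"],
    "diagnostics": ["test_dtc_report"],
}


def impacted_modules(changed, dependents):
    """Breadth-first walk from the changed modules through their dependents."""
    impacted = set(changed)
    queue = deque(changed)
    while queue:
        module = queue.popleft()
        for dependent in dependents.get(module, []):
            if dependent not in impacted:
                impacted.add(dependent)
                queue.append(dependent)
    return impacted


def select_tests(changed, dependents, tests_by_module):
    """Return tests for changed modules and everything downstream of them."""
    selected = []
    for module in sorted(impacted_modules(changed, dependents)):
        selected.extend(tests_by_module.get(module, []))
    return selected


selected = select_tests(["sensor_filter"], DEPENDENTS, TESTS_BY_MODULE)
print(selected)  # tests for sensor_filter and control_loop only
```

In this sketch a change to `sensor_filter` selects two of the five tests. On a project where target hardware is scarce, that kind of reduction is where the time savings come from.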

Summary

Software validation ensures that teams build the right software to meet customer and marketplace requirements. Validation is proof that you meet requirements and that your product is reliable, safe, and secure to protect your customers.

Software verification ensures that teams build the product in accordance with your organization’s own processes and standards and those required by the marketplace. In other words, validation proves the product works while verification ensures that you cross all the t’s and dot all the i’s.

Validation and verification consume a large part of the resources in embedded product development. Parasoft’s software test automation suite provides a unified set of tools to accelerate testing by helping teams shift testing left to the early stages of development while maintaining traceability, test result record keeping, code coverage details, report generation, and compliance documentation.