Parasoft Blog

Explore the four core capabilities that separate meaningful AI-powered API testing from surface-level automation.

AI is rapidly becoming a standard feature in API test automation tools. Nearly every vendor now claims to be "AI-powered," promising faster test creation, broader coverage, and accelerated delivery. But as adoption grows, engineering and QA leaders face a more nuanced and more important question:

What should AI actually do to make API testing meaningfully better, faster, and more reliable?

Not all AI-enhanced API testing tools are created equal—and for senior QA and engineering leaders, this distinction matters.

Many tools apply AI to a single, highly visible moment in the lifecycle, most often focused on test creation. That can look impressive in a demo, but at scale it often creates new bottlenecks instead of eliminating them. Faster test creation only translates to higher release velocity when feedback is fast, failures are actionable, and environments are reliable.

As automated suites grow, teams discover the hard truth: AI that optimizes one phase while ignoring the rest of the system is not a solution—it’s technical debt with better marketing.

Evaluating AI-powered API testing tools requires looking beyond individual features and asking a more strategic question: does this tool improve the entire testing system, from creation to execution speed to failure triage to environment stability, or does it simply move the bottleneck downstream?

Historically, teams evaluated API testing tools based on protocol support, scripting flexibility, CI/CD integration, reporting, and ease of use. Those criteria worked when API testing was primarily about validating individual services and catching functional regressions.

But modern delivery environments have changed what it takes to move fast with confidence.

Today’s systems are deeply interconnected and distributed. Automated test suites grow continuously as architectures scale. Regression cycles strain under increasing execution times. AI-driven application features introduce nondeterministic behavior that traditional assertions can’t reliably validate. And test environments—often shared, unstable, or partially available—frequently become the bottleneck that slows everything down.

In this reality, evaluating AI-powered API testing capabilities in isolation from the full testing cycle no longer works.

A tool that accelerates test creation but doesn’t shorten feedback loops will slow release velocity. A tool that generates more tests without intelligent execution and failure triage increases noise instead of confidence. A tool that ignores environment reliability leaves teams blocked when they should be shipping.

This is why AI must be evaluated not as a feature, but as part of a complete testing system, one that spans test creation, execution optimization, failure triage, and environment control. The capabilities that matter most are the ones that improve release velocity and confidence at the same time.

Let’s take a look at those four core capabilities.

Automated test generation is one of the most visible uses of AI in API testing. Many tools can generate tests from API specifications, traffic recordings, or observed behavior—dramatically reducing the effort to get started.

But generating tests for a single API or endpoint is only the beginning. Modern applications require AI-generated tests that go beyond individual services.

Teams should expect solutions that not only generate the test case itself, but also:

The AI should support end-to-end, cross-service test generation, even when APIs are distributed across multiple services and documented in many separate service definition files.

As agentic AI workflows are embedded into applications—recommendation engines, natural language responses, scoring systems—API responses become nondeterministic. Exact value matching no longer works.

For example, your LLM-based functionality might produce any one of the following responses:

They’re all correct, but writing assertions that handle that variety is brittle at best and often impossible with traditional validation tools.

AI-powered testing tools must support flexible validation strategies such as ranges and thresholds, probabilistic or tolerance-based assertions, and semantic or intent-based validation designed to handle nondeterministic outputs.
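
To make the first two strategies concrete, here is a minimal Python sketch of range, tolerance-based, and probabilistic checks. The helper names (`in_range`, `within_tolerance`, `pass_rate`), the `score` field, and the sample responses are all hypothetical and chosen for illustration; they are not taken from any particular tool. Semantic or intent-based validation usually needs a language model or similar component and is not shown here.

```python
import math

def in_range(value, low, high):
    """Range/threshold check: True when low <= value <= high."""
    return low <= value <= high

def within_tolerance(actual, expected, rel_tol=0.05):
    """Tolerance check: True when actual is within rel_tol (relative) of expected."""
    return math.isclose(actual, expected, rel_tol=rel_tol)

def pass_rate(samples, check):
    """Probabilistic check helper: fraction of samples that satisfy `check`."""
    passed = sum(1 for sample in samples if check(sample))
    return passed / len(samples)

# Hypothetical responses from calling the same scoring endpoint four times.
responses = [{"score": 0.91}, {"score": 0.88}, {"score": 0.93}, {"score": 0.62}]

# Instead of asserting an exact value, assert that most calls land in range.
rate = pass_rate(responses, lambda r: in_range(r["score"], 0.8, 1.0))
print(rate)  # 0.75
```

A test built this way would then assert something like `rate >= 0.7` rather than comparing any single response to a fixed value. Where to set that threshold is a judgment call that depends on how variable the feature under test is allowed to be.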

As more applications integrate agentic AI features, teams need API testing solutions that can keep up. The answer? Leveraging AI to execute natural-language assertions that intelligently validate nondeterministic responses.

AI-driven test generation can sometimes lead to an unintended side effect: regression suite sprawl and pipeline bloat.

As test creation becomes easier and accelerates, test suites grow larger. As a result:

When time is short, teams are often forced into difficult tradeoffs. They may need to defer regression testing until late in the cycle or make educated guesses about which tests to run based on recent changes. These tradeoffs can lead to one of the following:

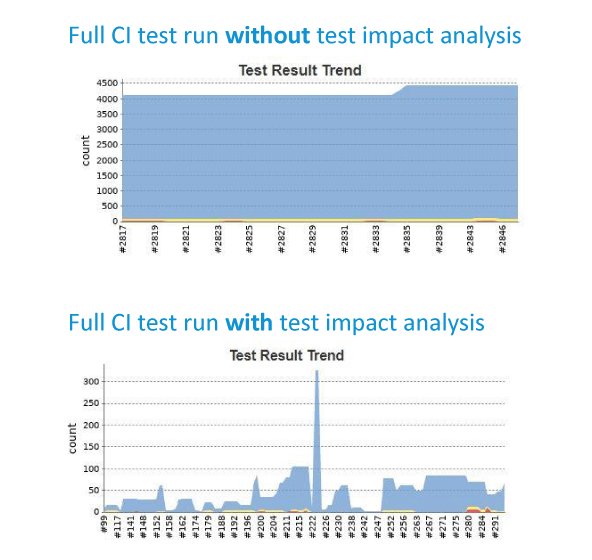

This is where intelligent test impact analysis (TIA) becomes essential.

Instead of running every test for every change, TIA identifies which code changed and uses code coverage data to determine which tests exercise that code. This shrinks the scope of regression testing, focusing execution only on the test cases needed to validate the application’s changes. Teams get faster feedback without sacrificing confidence.
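
The core selection idea can be sketched in a few lines of Python. This is a simplified illustration, not how any specific product implements TIA: it assumes a per-test coverage map is already available, and the test names, file paths, and the `select_impacted_tests` function are hypothetical.

```python
def select_impacted_tests(coverage_map, changed_files):
    """Return the tests whose recorded coverage touches any changed file.

    coverage_map: dict mapping test name -> set of source files it executed
    changed_files: iterable of source files modified in the current change
    """
    changed = set(changed_files)
    return sorted(
        test for test, covered in coverage_map.items() if changed & set(covered)
    )

# Hypothetical coverage data collected during an earlier full regression run.
coverage_map = {
    "test_create_order": {"orders/api.py", "orders/models.py"},
    "test_refund": {"payments/refund.py", "orders/models.py"},
    "test_login": {"auth/session.py"},
}

impacted = select_impacted_tests(coverage_map, ["orders/models.py"])
print(impacted)  # ['test_create_order', 'test_refund']
```

In this sketch a change to `orders/models.py` selects two of the three tests and skips `test_login`. Real implementations typically work at a finer granularity than whole files and need the coverage map to be refreshed as the code evolves.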

AI can also classify test failures, distinguishing between:

By filtering out irrelevant failures, teams spend less time investigating false alarms and can triage and remediate issues faster. This minimizes time-to-triage and prevents noisy or irrelevant failures from impeding development velocity.

As regression suites grow with AI-driven testing, teams need API testing solutions that go beyond generating more tests. Test impact analysis and AI-powered failure classification keep regression focused, reduce noise, and accelerate remediation, delivering fast, reliable feedback and high confidence in every release.

Modern applications are rarely monolithic. Microservices, third-party APIs, and distributed architectures are now the norm—and API testing solutions must be designed for that reality. Effective API testing isn’t just validating individual endpoints. It requires validating how services work together across real business workflows.

In practice, dependencies are often unavailable, unstable, costly to access, or owned by other teams.

Many developers rely on API mocking tools to work around these constraints. But static mocks are often limited and difficult for QA teams to adopt. They rely on fixed responses and can’t model stateful behavior, data dependencies, or real service interactions, making them brittle and insufficient for validating end-to-end workflows in complex distributed environments.
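
The difference between a fixed-response mock and a stateful simulation is easiest to see side by side. The Python sketch below is illustrative only: the account service, its methods, and the opening balance are hypothetical, and a real simulated dependency would sit behind a network endpoint rather than an in-process class.

```python
class StaticMock:
    """Fixed responses: every call returns the same canned payload."""

    def get_balance(self, account_id):
        return {"account": account_id, "balance": 100}

    def withdraw(self, account_id, amount):
        return {"status": "ok"}


class StatefulAccountService:
    """Stateful stand-in: earlier calls change what later calls return."""

    def __init__(self, opening_balance=100):
        self._opening = opening_balance
        self._balances = {}

    def get_balance(self, account_id):
        balance = self._balances.get(account_id, self._opening)
        return {"account": account_id, "balance": balance}

    def withdraw(self, account_id, amount):
        balance = self._balances.get(account_id, self._opening)
        if amount > balance:
            return {"status": "declined"}
        self._balances[account_id] = balance - amount
        return {"status": "ok"}


static = StaticMock()
static.withdraw("a1", 30)
print(static.get_balance("a1")["balance"])  # 100, the withdrawal left no trace

stateful = StatefulAccountService()
stateful.withdraw("a1", 30)
print(stateful.get_balance("a1")["balance"])  # 70
print(stateful.withdraw("a1", 100)["status"])  # declined
```

An end-to-end workflow test that withdraws funds and then checks the balance can only produce a meaningful result against the second class, which is the gap the paragraph above describes.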

A complete API testing solution should include service virtualization to simulate dependent services and keep testing moving despite imperfect environments. Historically, however, service virtualization has been hard for QA teams to adopt, requiring deep technical expertise to design, configure, and maintain complex virtual services.

AI changes that equation by turning what was once a complex, manual task into a more accessible workflow.

With AI assistance, teams can generate and evolve virtual services using natural language instructions, dramatically reducing setup time and ongoing maintenance effort. This makes service virtualization practical for a broader range of teams, enabling earlier testing, fewer environment-related delays, and more consistent validation across distributed systems.

When evaluating AI-powered API testing tools, teams should look beyond isolated AI features and focus on platform-level capabilities. Generating tests with AI is valuable, but without built-in service virtualization, testing still breaks down when dependencies are unavailable or environments are unstable.

A complete solution combines intelligent test generation with service virtualization to keep testing continuous, realistic, and scalable—ensuring AI accelerates delivery rather than introducing new bottlenecks.

As AI plays a larger role in API testing decisions, from generating tests and assertions to optimizing execution and classifying failures, trust becomes critical.

For enterprise teams operating in regulated and safety-critical industries such as finance, healthcare, aerospace, and defense, AI must enhance productivity without sacrificing governance, traceability, or control.

A trustworthy, production-ready, AI-powered API testing solution should ensure AI behavior is:

Black-box automation that can’t be inspected or governed introduces unacceptable risk, particularly in environments with strict regulatory, security, or audit requirements.

Questions to ask when evaluating tools:

Ultimately, controllability is what separates experimental AI features from production-ready API testing platforms. Trustworthy, transparent AI accelerates adoption and enables teams to scale testing with confidence, ensuring AI strengthens software quality without compromising governance or risk management.

As AI becomes ubiquitous, the real differentiation shifts from whether a tool uses AI to how it applies intelligence in a practical, enterprise-ready way.

Use the following questions as a framework for evaluating AI-powered API testing solutions.

Affirmative answers to these questions indicate AI that delivers real, measurable testing value to help teams accelerate delivery and maintain confidence—not automation theater or superficial AI features.
