Improving Test Execution Efficiency With Test Impact Analysis

Every code change carries risk, but what if developers could get rapid feedback to know whether their changes are safe? Discover how test impact analysis increases test execution efficiency.

In software development, change is inevitable. More than that, it keeps software systems relevant, efficient, and resilient. As technology advances at a relentless pace and user demands grow increasingly complex, the ability to adapt and evolve software is paramount.

Test impact analysis (TIA) increases test execution efficiency while ensuring code modifications don’t introduce unintended defects. TIA pinpoints the tests that need to be run and validates code changes quickly. As a result, teams can confidently make swift changes.

Test impact analysis isn’t a novel concept. However, its prominence has grown substantially in recent years. In the past, software testing primarily focused on static, pre-release assessments aimed at finding and addressing defects. This approach often falls short when dealing with the dynamic nature of modern software, where change is constant.

The rise of Agile and DevOps methodologies, rapid development cycles, and continuous integration practices has reinforced the need for a more adaptable, proactive, and on-the-go testing strategy.

With test impact analysis, there’s a fundamental shift in how we validate software, especially for large and complex systems. Instead of waiting for long test runs to complete and possibly delaying the work, this approach assesses the impact of changes on existing code, ensuring that each modification is rigorously tested to maintain overall system stability.

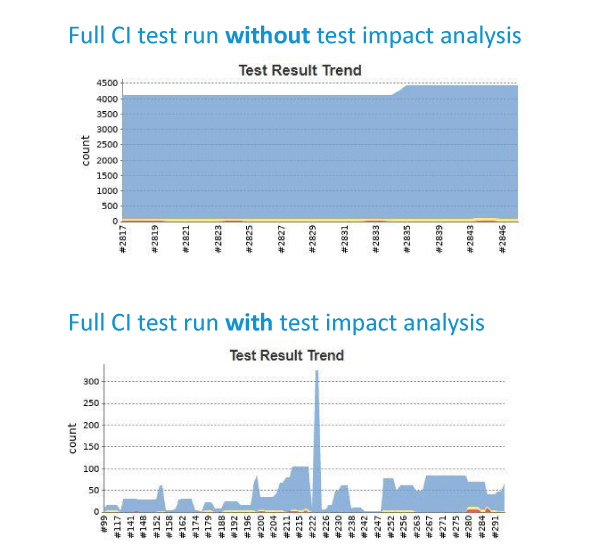

Test impact analysis is a software testing methodology that revolves around assessing and verifying the impact of specific changes made to a software application during its development or maintenance. Instead of running the entire test suite with every commit, TIA automatically and intelligently selects only the subset of test cases that correlate to those recent changes, optimizing build times and reducing resource consumption.
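The selection step described above can be illustrated with a minimal sketch. It assumes a coverage map, recorded during an earlier full run, that links each test to the source files it exercised. The map, the test names, and the `select_impacted_tests` helper are hypothetical and are not taken from any particular tool.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical coverage map: test name -> source files it exercised in a
# previous full run. A real tool would record this automatically.
CoverageMap = Dict[str, Set[str]]


def select_impacted_tests(coverage: CoverageMap, changed_files: Iterable[str]) -> List[str]:
    """Return the tests whose recorded coverage touches any changed file."""
    changed = set(changed_files)
    return sorted(test for test, files in coverage.items() if files & changed)


# Illustrative data only.
coverage_map: CoverageMap = {
    "test_login": {"auth.py", "session.py"},
    "test_checkout": {"cart.py", "payment.py"},
    "test_profile": {"auth.py", "profile.py"},
}

print(select_impacted_tests(coverage_map, ["auth.py"]))
# ['test_login', 'test_profile']
```

A test with no recorded coverage would never be selected by this sketch, so a practical setup would likely run new or unmapped tests unconditionally.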

In a continuous integration (CI) pipeline, TIA typically works as follows:

By integrating TIA into CI workflows, teams minimize unnecessary test execution, optimize infrastructure costs, and accelerate development cycles while ensuring that every code change is properly validated.

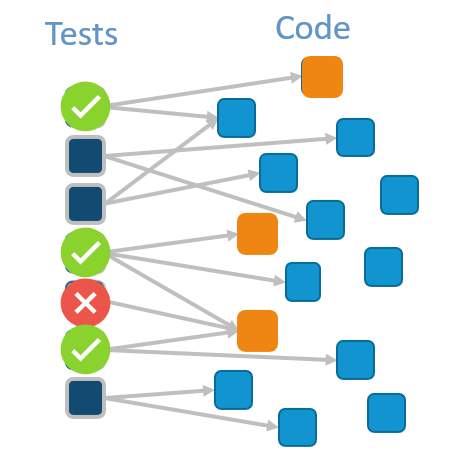

Graphic showing code modifications being correlated to impacted test cases. This correlation becomes an automated process with Parasoft’s TIA.

While test impact analysis in CI/CD ensures confidence in continuous builds, live unit testing applies the same concepts within the IDE, building developer confidence earlier by providing rapid feedback as code is modified.

As developers make changes to existing code and save their work within the IDE, live unit testing automatically runs in the background. It identifies the subset of unit tests relevant to the recent changes and executes them in real time.
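As a rough sketch of that save-triggered loop, the example below reruns only the tests mapped to a file when its saved content actually changes. The `LiveTestSession` class, its `on_save` hook, and the `run_test` callback are assumptions made for illustration. A real IDE integration would supply its own save events and test runner.

```python
import hashlib
from typing import Callable, Dict, List, Set


class LiveTestSession:
    """Re-runs only the tests mapped to a file when its saved content changes."""

    def __init__(self, coverage: Dict[str, Set[str]], run_test: Callable[[str], bool]):
        self.coverage = coverage            # test name -> files it exercises (assumed known)
        self.run_test = run_test            # hypothetical callback: runs one test, returns pass/fail
        self._digests: Dict[str, str] = {}  # last seen content hash per file

    def on_save(self, path: str, content: str) -> Dict[str, bool]:
        """Call when the editor saves `path`; returns results for impacted tests."""
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if self._digests.get(path) == digest:
            return {}                       # content is unchanged, so skip the run
        self._digests[path] = digest
        impacted = sorted(t for t, files in self.coverage.items() if path in files)
        return {test: self.run_test(test) for test in impacted}


executed: List[str] = []


def fake_runner(test: str) -> bool:
    """Stand-in for a real test runner; records the call and reports a pass."""
    executed.append(test)
    return True


session = LiveTestSession(
    {"test_cart_total": {"cart.py"}, "test_login": {"auth.py"}},
    fake_runner,
)
first = session.on_save("cart.py", "def total(): return 1")
second = session.on_save("cart.py", "def total(): return 1")  # identical save
```

Hashing the saved content is one simple way to avoid rerunning tests when a save changes nothing. It is a design choice for this sketch, not a description of how any specific product detects changes.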

Developers can see test results as they code, catching potential failures immediately. They can refine their changes before pushing new code to a shared branch. This automated workflow has its benefits:

By integrating live unit testing into the development workflow and complementing it with TIA, teams create a seamless quality assurance strategy that efficiently validates changes both locally, as code is being modified, and in CI/CD pipelines.

Implementing test impact analysis and live unit testing offers numerous benefits for software development and quality assurance processes.

Implementing test impact analysis and live unit testing is a fundamental step toward achieving better software development. The software industry's continuous evolution demands more efficient and adaptable testing methodologies. These techniques shift testing from rigid, static plans to a dynamic, more responsive approach, enabling teams to keep pace with the ever-changing software landscape and address emerging challenges proactively.

Test impact analysis and live unit testing reduce the uncertainty of software changes. Developers no longer have to suffer through long wait times to find out whether their modifications will break something. Instead, they get rapid, targeted feedback.

By integrating these techniques into development workflows, teams can move faster, innovate freely, and make every code change with confidence. From a user perspective, TIA also helps teams deliver software that responds to users' evolving needs.

To succeed in implementing test impact analysis and live unit testing, organizations should invest in the right tools and foster a culture of continuous learning. By doing so, they can create a development and testing environment that thrives on change.
