
Mastering Java Application Performance Testing With Parasoft

A single line of code can wreak havoc on your application's performance if it isn't detected and corrected in time. Read on to learn how to monitor Java threads and pinpoint the specific lines of code that could cause performance problems in your application.


Thread-related issues can adversely affect the performance of a Web API application in ways that are often hard to diagnose and tricky to resolve. Keeping a clear picture of a thread’s behavior is essential to achieving optimal performance.

In this post, I’ll show you how to use the Load Test JVM Threads Monitor in Parasoft SOAtest to view the threading activity of a JVM. The monitor provides graphs of vital statistics and configurable thread dumps that can point to the lines of code responsible for performance loss caused by inefficient thread use. Parasoft SOAtest’s performance testing tools enable you to convert any functional tests into load and performance tests.

Server applications, like web servers, are built to process multiple client requests simultaneously. The number of client requests simultaneously processed by a server is usually called "load." An increasing load can cause slow response times, application errors, and eventually a crash. Thread-related concurrency and synchronization issues caused by simultaneous request processing can contribute to all these categories of undesired behavior. For this reason, they should be thoroughly tested for and weeded out before the application is deployed in production.

One of the biggest differences that separates performance testing from other types of software tests is its systematic focus on concurrency issues. The multi-threaded mechanism that server applications employ to process multiple client requests simultaneously can make application code run into concurrency-induced errors and inefficiencies that do not occur in other, typically single-threaded types of tests, such as unit or integration tests.
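To make this kind of concurrency-induced error concrete, here is a minimal Java sketch of a check-then-act race. It is not taken from any application discussed in this post: `RaceDemo`, `RacyAccount`, and the barrier that forces the unlucky interleaving are assumptions made purely for illustration. Under real load the same interleaving happens only occasionally, which is why a single-threaded test never sees it.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

class RaceDemo {
    /** Hypothetical account whose withdraw() checks and updates the balance in two separate steps. */
    static class RacyAccount {
        private final AtomicInteger balance;
        // Demo-only hook: makes both threads finish the check before either one updates.
        private final CyclicBarrier afterCheck;

        RacyAccount(int initialBalance, CyclicBarrier afterCheck) {
            this.balance = new AtomicInteger(initialBalance);
            this.afterCheck = afterCheck;
        }

        boolean withdraw(int amount) {
            if (balance.get() < amount) {
                return false;               // check: insufficient funds
            }
            try {
                afterCheck.await();         // the other thread passes the same check here
            } catch (InterruptedException | BrokenBarrierException e) {
                throw new IllegalStateException(e);
            }
            balance.addAndGet(-amount);     // act: based on a check that is now stale
            return true;
        }

        int getBalance() {
            return balance.get();
        }
    }

    /** Two threads each withdraw the full balance; returns the final balance. */
    static int run() {
        RacyAccount account = new RacyAccount(100, new CyclicBarrier(2));
        Thread t1 = new Thread(() -> account.withdraw(100));
        Thread t2 = new Thread(() -> account.withdraw(100));
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return account.getBalance();
    }

    public static void main(String[] args) {
        System.out.println("Final balance: " + run()); // prints "Final balance: -100"
    }
}
```

Each individual update is atomic, yet the account is still overdrawn, because the check and the update are not performed as one unit.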

From the perspective of validating application code for thread-related concurrency and synchronization issues, the goal of a performance test is twofold.

Achieving the first of these goals requires putting the application under test (AUT) under a load comparable to that which it will experience in production by applying a stream of simulated client requests with properly configured load concurrency, request intensity, and test duration. To learn more, see the Performance Testing Best Practices Guide.

Achieving the second of these goals is the subject of this blog post.

When it comes to catching thread-related issues and their details, the main questions are how to catch them, what to do with the diagnostic data, and when to look for such issues. Below are the lists of key answers to those questions.

Ask your performance test product vendor how their tool can help you catch and diagnose AUT threading issues.

The seemingly random pattern of some thread-related issues poses an additional performance testing challenge: catching and diagnosing rare failures. Fixing a rare one-in-a-million error can become a nightmare for QA and development teams at the late stages of the application life cycle.

To increase the chances of catching such errors, the application under load test should be continuously monitored during all stages of performance testing. Below is a list of main performance test types and why you should use thread monitors as you run them.

See the Performance Testing Best Practices Guide for more details on performance test types and their usage.

As an example of how these practices can be applied, we'll follow a hypothetical Java development team that runs into a few common thread-related performance issues while creating a web API application, and we'll see how the team diagnoses them. After that, we’ll look at more complex examples from real applications. Note that some suboptimal code in the examples below has been added intentionally for demonstration.

Our hypothetical Java development team embarked on a new project: a REST API banking application. The team set up a continuous integration (CI) infrastructure to support the new project. It includes a periodic CI job that uses Parasoft SOAtest’s load testing module to continuously test the performance of the new application.

The Bank application code is starting to grow, and the tests are running. However, the team noticed that after implementing a new transfer operation, the Bank application started having sporadic failures under higher load. The failure comes from the account validation method, which occasionally finds a negative balance in overdraft-protected accounts. The failure in account validation causes an exception and an HTTP 500 response from the API. The developers suspect that this may be caused by a race condition in the IAccount.withdraw method when it is called by different threads processing concurrent transfer operations on the same account:

    public boolean transfer(IAccount from, IAccount to, int amount) {
        // No synchronization: concurrent transfers can interleave inside withdraw()
        if (from.withdraw(amount)) {
            to.deposit(amount);
            return true;
        }
        return false;
    }

The developers decide to synchronize access to accounts inside the transfer operation to prevent the suspected race condition:

    14: public boolean transfer(IAccount from, IAccount to, int amount) {
    15:     synchronized (to) {           // first lock: which account it is depends on argument order
    16:         synchronized (from) {     // second lock: requested while still holding the first
    17:             if (from.withdraw(amount)) {
    18:                 to.deposit(amount);
    19:                 return true;
    20:             }
    21:         }
    22:     }
    23:     return false;
    24: }

The team also adds the JVM Threads Monitor to the Load Test project that runs against the REST API application. The monitor provides graphs of deadlocked, blocked, parked, and total threads and records dumps of threads in these states.

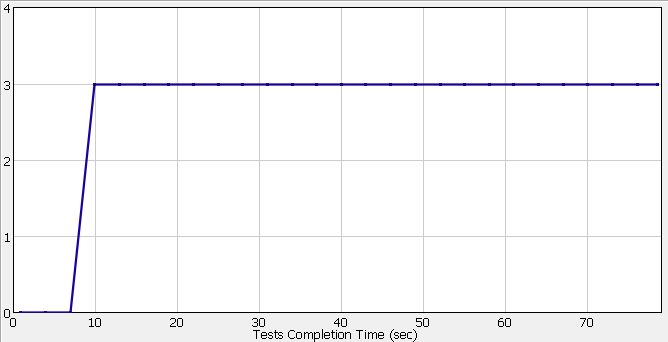

The code change is pushed to the repository and gets picked up by the CI performance testing process. The next day, the developers find that the performance test failed overnight. The Bank application stopped responding shortly after the start of the transfer operation performance test. Inspecting the JVM Threads Monitor graphs in the Load Test report quickly shows that there are deadlocked threads in the Bank application. See Fig 1.a. The deadlock details were saved by the JVM Threads Monitor as a part of the report and show the exact lines of code responsible for the deadlock. See Listing 1.b.

Fig 1.a: Number of deadlocked threads in the application under test (AUT).

    DEADLOCKED thread: http-nio-8080-exec-20
        com.parasoft.demo.bank.v2.ATM_2.transfer:15
        com.parasoft.demo.bank.ATM.transfer:21
        …
    Blocked by:
    DEADLOCKED thread: http-nio-8080-exec-7
        com.parasoft.demo.bank.v2.ATM_2.transfer:16
        com.parasoft.demo.bank.ATM.transfer:21
        com.parasoft.demo.bank.v2.RestController_2.transfer:29
        sun.reflect.GeneratedMethodAccessor58.invoke:-1
        sun.reflect.DelegatingMethodAccessorImpl.invoke:-1
        java.lang.reflect.Method.invoke:-1
        org.springframework.web.method.support.InvocableHandlerMethod.doInvoke:209

Listing 1.b: Deadlock details saved by the JVM Threads Monitor.
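For readers who want to see the mechanics behind such a report, the sketch below reproduces the same opposite-order locking pattern and detects it with the standard `java.lang.management.ThreadMXBean` API. How the JVM Threads Monitor collects its data internally is not described here, so treat this only as an illustration of what the JVM itself exposes. `DeadlockProbe` and the `demo-transfer-*` thread names are hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.CountDownLatch;

class DeadlockProbe {
    /** Starts two daemon threads that lock the same two objects in opposite order. */
    static void createDeadlock(Object a, Object b) {
        CountDownLatch bothHoldFirstLock = new CountDownLatch(2);
        startLocker("demo-transfer-1", a, b, bothHoldFirstLock);
        startLocker("demo-transfer-2", b, a, bothHoldFirstLock);
    }

    private static void startLocker(String name, Object first, Object second,
                                    CountDownLatch bothHoldFirstLock) {
        Thread t = new Thread(() -> {
            synchronized (first) {
                bothHoldFirstLock.countDown();
                try {
                    bothHoldFirstLock.await(); // wait until the other thread holds its first lock
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                synchronized (second) {
                    // Never reached: the other thread owns this lock and waits for ours.
                }
            }
        }, name);
        t.setDaemon(true); // lets the JVM exit even though these threads never finish
        t.start();
    }

    /** Polls the JVM until it reports deadlocked threads or the timeout expires. */
    static int countDeadlockedThreads(long timeoutMillis) {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        long deadline = System.currentTimeMillis() + timeoutMillis;
        while (System.currentTimeMillis() < deadline) {
            // Covers monitors and java.util.concurrent locks; returns null when nothing is deadlocked.
            long[] ids = threads.findDeadlockedThreads();
            if (ids != null) {
                return ids.length;
            }
            try {
                Thread.sleep(20);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return 0;
            }
        }
        return 0;
    }

    public static void main(String[] args) {
        createDeadlock(new Object(), new Object());
        System.out.println("Deadlocked threads: " + countDeadlockedThreads(5000));
    }
}
```

The two locker threads mirror two concurrent transfers between the same pair of accounts in opposite directions, which is the situation that line 15 and line 16 of Version 2 create.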

The Bank application developers decide to resolve the deadlock by synchronizing on a single global object and modifying the transfer method code as follows:

14: public boolean transfer(IAccount from, IAccount to, int amount) {

15: synchronized (Account.class) {

16: if (from.withdraw(amount)) {

17: to.deposit(amount);

18: return true;

19: }

20: }

21: return false;

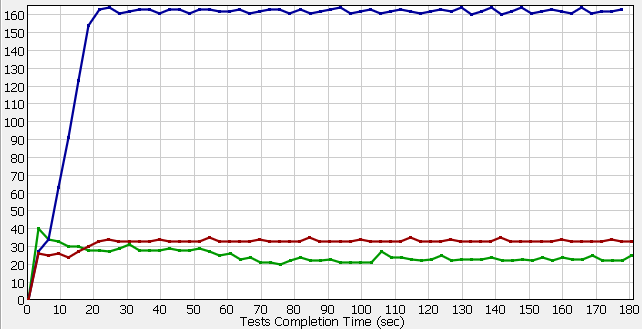

22: }The change resolves the deadlock issue of Version 2 and the race condition of Version 1, but the average transfer operation response time increases more than five-fold from 30 to over 150 milliseconds. See Fig. 2.a. The JVM Threads Monitor BlockedRatio graph shows that from 60 to 75 percent of the application threads are in BLOCKED state during the load test execution. See Fig. 2.b. The details saved by the monitor indicate that application threads are blocked while trying to enter the globally synchronized section on line 15. See Listing 2.c.

    BLOCKED thread: http-nio-8080-exec-4
        com.parasoft.demo.bank.v3.ATM_3.transfer:15
        com.parasoft.demo.bank.ATM.transfer:21
        com.parasoft.demo.bank.v3.RestController_3.transfer:29
        …
    Blocked by:
    SLEEPING thread: http-nio-8080-exec-8
        java.lang.Thread.sleep:-2
        com.parasoft.demo.bank.Account.doWithdraw:64
        com.parasoft.demo.bank.Account.withdraw:31

Listing 2.c: Blocked thread details saved by the JVM Threads Monitor.
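The "Blocked by" relationship in a listing like this can be read from the JVM with the standard `ThreadInfo.getLockOwnerName()` call. The following sketch is an illustration under assumptions, not the monitor's implementation: `BlockedByProbe`, the `demo-holder` and `demo-waiter` threads, and the report format are all hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.util.concurrent.CountDownLatch;

class BlockedByProbe {
    /** Describes a BLOCKED thread and the thread that owns the lock it wants, or returns null. */
    static String describeIfBlocked(Thread thread) {
        ThreadInfo info = ManagementFactory.getThreadMXBean().getThreadInfo(thread.getId(), 8);
        if (info == null || info.getThreadState() != Thread.State.BLOCKED) {
            return null;
        }
        StringBuilder report = new StringBuilder("BLOCKED thread: " + info.getThreadName() + "\n");
        for (StackTraceElement frame : info.getStackTrace()) {
            report.append("    ").append(frame.getClassName()).append('.')
                  .append(frame.getMethodName()).append(':').append(frame.getLineNumber()).append('\n');
        }
        return report.append("Blocked by: ").append(info.getLockOwnerName()).toString();
    }

    private static void await(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    /** One thread holds a global lock while another tries to enter it; returns the report. */
    static String demo() {
        Object globalLock = new Object();
        CountDownLatch lockHeld = new CountDownLatch(1);
        CountDownLatch release = new CountDownLatch(1);
        Thread holder = new Thread(() -> {
            synchronized (globalLock) {
                lockHeld.countDown();
                await(release); // keeps the lock, like a slow withdraw inside the global section
            }
        }, "demo-holder");
        Thread waiter = new Thread(() -> {
            synchronized (globalLock) {
                // Entered only after the holder releases the lock.
            }
        }, "demo-waiter");
        holder.setDaemon(true);
        waiter.setDaemon(true);
        holder.start();
        await(lockHeld);
        waiter.start();

        String report = null;
        long deadline = System.currentTimeMillis() + 5000;
        while (report == null && System.currentTimeMillis() < deadline) {
            report = describeIfBlocked(waiter);
            Thread.yield();
        }
        release.countDown();
        return report;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```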

The development team searches for a fix that would resolve the race condition without introducing deadlocks or compromising the responsiveness of the application. After some research, the team finds a promising solution that uses the java.util.concurrent.locks.ReentrantLock class:

    private boolean doTransfer(Account from, Account to, int amount) {
        try {
            acquireLocks(from.getReentrantLock(), to.getReentrantLock());
            if (from.withdraw(amount)) {
                to.deposit(amount);
                return true;
            }
            return false;
        } finally {
            releaseLocks(from.getReentrantLock(), to.getReentrantLock());
        }
    }

The graphs in Fig. 3.a show the response times of the Bank application transfer operation for Version 4 (optimized locking, red), Version 3 (global object synchronization, blue), and Version 1 (unsynchronized transfer operation, green). The graphs indicate that transfer performance improved dramatically as a result of the locking optimization. The slight difference between the synchronized (red) and unsynchronized (green) transfer operations is an acceptable price for preventing race conditions.

Fig 3.a: Transfer operation response time of Bank application Version 4 (red), Version 3 (blue) and Version 1 (green).

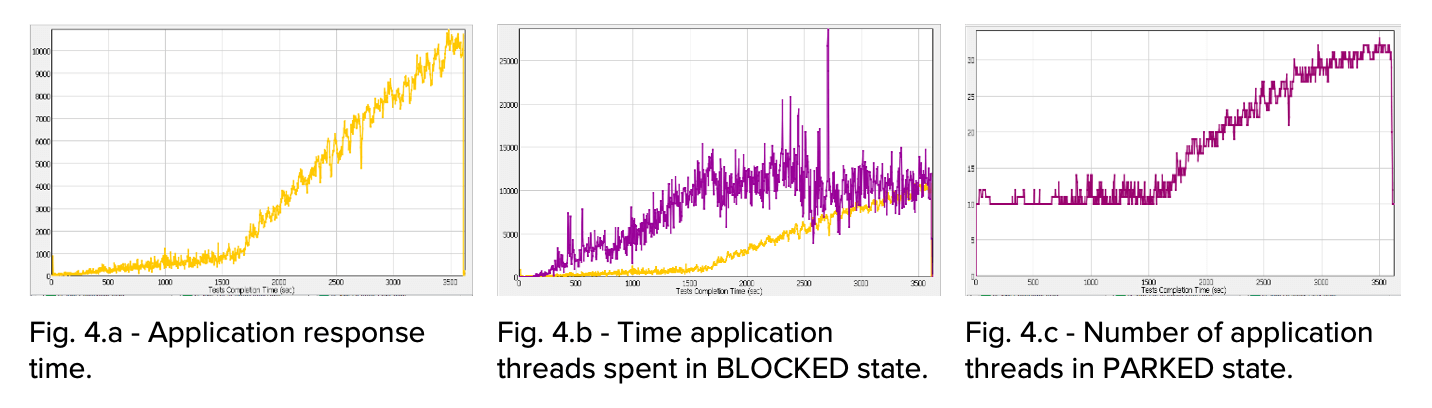
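The Version 4 listing calls `acquireLocks` and `releaseLocks` without showing them. One plausible way to write such helpers is the try-lock-and-back-off pattern sketched below. This is an assumption about what they might look like, not the Bank application's actual code. `TransferDemo` and its nested `Account` class are hypothetical stand-ins.

```java
import java.util.concurrent.locks.ReentrantLock;

class TransferDemo {
    /** Hypothetical stand-in for the article's Account; balance is guarded by its lock. */
    static class Account {
        final ReentrantLock lock = new ReentrantLock();
        int balance;

        Account(int balance) {
            this.balance = balance;
        }
    }

    /** Acquires both locks or neither, so a thread never waits while holding only one. */
    static void acquireLocks(ReentrantLock first, ReentrantLock second) {
        while (true) {
            first.lock();
            if (second.tryLock()) {
                return;             // both locks held
            }
            first.unlock();         // could not get the second lock: back off and retry
            Thread.yield();
        }
    }

    static void releaseLocks(ReentrantLock first, ReentrantLock second) {
        second.unlock();
        first.unlock();
    }

    static boolean transfer(Account from, Account to, int amount) {
        acquireLocks(from.lock, to.lock);
        try {
            if (from.balance < amount) {
                return false;
            }
            from.balance -= amount;
            to.balance += amount;
            return true;
        } finally {
            releaseLocks(from.lock, to.lock);
        }
    }

    /** Runs opposite-direction transfers on two threads; returns the combined final balance. */
    static int run(int transfersPerThread) {
        Account a = new Account(1000);
        Account b = new Account(1000);
        Thread t1 = new Thread(() -> {
            for (int i = 0; i < transfersPerThread; i++) {
                transfer(a, b, 1);
            }
        });
        Thread t2 = new Thread(() -> {
            for (int i = 0; i < transfersPerThread; i++) {
                transfer(b, a, 1);
            }
        });
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return a.balance + b.balance;
    }

    public static void main(String[] args) {
        System.out.println("Combined balance: " + run(5000)); // prints "Combined balance: 2000"
    }
}
```

Because a thread that fails to get the second lock releases the first one, the opposite-order deadlock of Version 2 cannot form, and unrelated account pairs never contend with each other as they did under the global lock of Version 3. In this sketch the locks are acquired before the `try` block, so the `finally` clause only ever releases locks that are actually held.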

The Bank application examples above demonstrate how to resolve typical isolated cases of performance degradation caused by threading issues. Real-world cases may be more complicated. The graphs in Fig. 4 show an example of a production REST API application whose response time kept growing as the performance test progressed. The application response time grew at a lower rate in the first half of the test and at a higher rate in the second half. See Fig 4.a.

In the first half of the test, the response time growth correlated with total time application threads spent in the BLOCKED state. See Fig 4.b.

In the second half of the test, the response time growth correlated with the number of application threads in the PARKED state. See Fig 4.c.

The stack traces captured by the Load Test JVM Threads Monitor provided the details: one pointed at a synchronized block, which was responsible for excessive time spent in BLOCKED state. The other pointed at the lines of code that used java.util.concurrent.locks classes for synchronization, which was responsible for keeping threads in the PARKED state. After these areas of code were optimized, both performance issues were resolved.

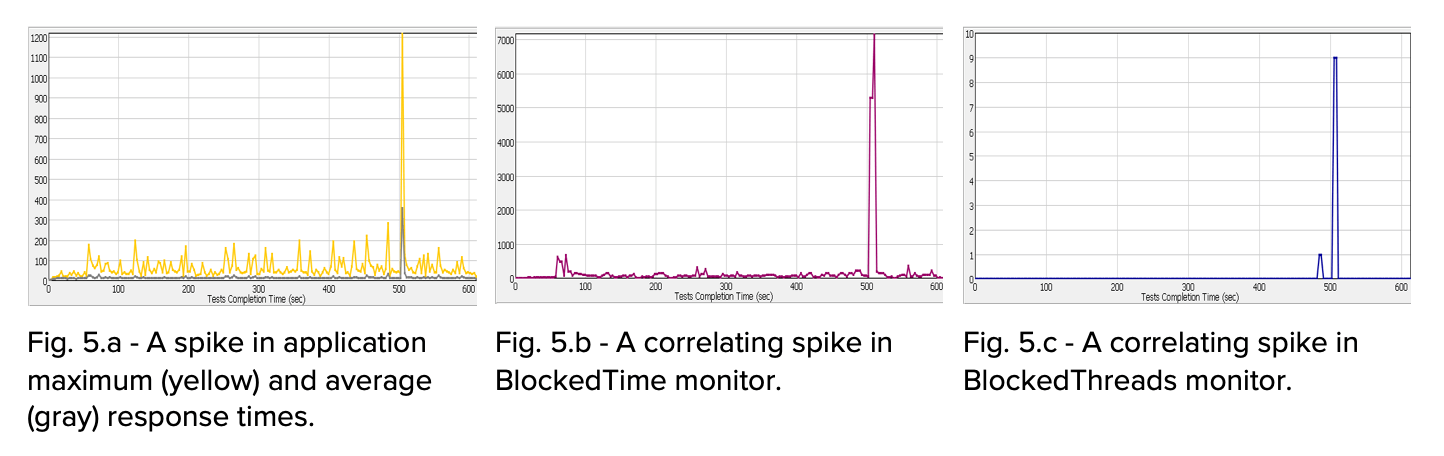

The Load Test JVM Threads Monitor can be very helpful in capturing details of rare thread-related problems, especially if your performance tests are automated and run regularly. The graphs in Fig. 5 show a production REST API application that had intermittent spikes in average and maximum response times. See Fig. 5.a.

Such spikes in application response time are often caused by a suboptimal JVM garbage collector configuration, but in this instance a correlating spike in the BlockedTime monitor (Fig. 5.b) points to thread synchronization as the source of the problem. The BlockedThreads monitor helps even more here by capturing the stack traces of the blocked and the blocking threads. It is important to understand the difference between the BlockedTime and the BlockedThreads monitors.

The BlockedTime monitor shows the accumulated time that JVM threads spent in the BLOCKED state between monitor invocations, while the BlockedThreads monitor takes periodic snapshots of JVM threads and searches for blocked threads in those snapshots. For this reason, the BlockedTime monitor is more reliable at detecting thread blocking, but it only tells you that thread-blocking issues exist.

Because the BlockedThreads monitor takes periodic thread snapshots, it may miss some thread-blocking events. On the plus side, when it does capture such an event, it provides detailed information about what causes the blocking. Whether a BlockedThreads monitor captures the code-related details of a blocked state is therefore a matter of statistics, but if your performance tests run on a regular basis, you will soon get a spike in the BlockedThreads graph (see Fig. 5.c), which means that blocked and blocking thread details have been captured. These details will point you to the lines of code responsible for the rare spikes in the application response time.
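The difference between accumulated blocked time and periodic snapshots can be reproduced with the standard `ThreadMXBean` contention monitoring API. The sketch below is only an analogy for the two monitor channels, not a description of how they are implemented. `BlockedTimeVsSnapshot` and the `demo-worker` thread are hypothetical, and the example assumes a JVM that supports thread contention monitoring.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.CountDownLatch;

class BlockedTimeVsSnapshot {
    private static void await(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    /**
     * Blocks a worker for roughly holdMillis, then inspects it after the blocking has ended.
     * Returns {accumulated blocked millis, 1 if the late snapshot still sees BLOCKED else 0}.
     * The first element is -1 when contention monitoring is not supported.
     */
    static long[] run(long holdMillis) {
        ThreadMXBean bean = ManagementFactory.getThreadMXBean();
        if (!bean.isThreadContentionMonitoringSupported()) {
            return new long[] {-1, 0};
        }
        bean.setThreadContentionMonitoringEnabled(true);

        Object lock = new Object();
        CountDownLatch passedLock = new CountDownLatch(1);
        CountDownLatch exit = new CountDownLatch(1);
        Thread worker = new Thread(() -> {
            synchronized (lock) {
                // Entered only after the caller leaves its synchronized block.
            }
            passedLock.countDown();
            await(exit); // stay alive so the thread can still be inspected
        }, "demo-worker");
        worker.setDaemon(true);

        synchronized (lock) {
            worker.start();
            while (worker.getState() != Thread.State.BLOCKED) {
                Thread.yield();
            }
            try {
                Thread.sleep(holdMillis); // the worker stays BLOCKED for this long
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        await(passedLock);

        // The blocking episode is over: a snapshot taken now misses it,
        // but the accumulated blocked time still records it.
        ThreadInfo info = bean.getThreadInfo(worker.getId());
        long blockedMillis = info.getBlockedTime();
        long seenBlocked = info.getThreadState() == Thread.State.BLOCKED ? 1 : 0;
        exit.countDown();
        return new long[] {blockedMillis, seenBlocked};
    }

    public static void main(String[] args) {
        long[] result = run(200);
        System.out.println("Accumulated blocked time (ms): " + result[0]);
        System.out.println("Late snapshot sees BLOCKED: " + (result[1] == 1));
    }
}
```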

Besides being an effective diagnostic tool, the Load Test JVM Threads Monitor can also be used to create performance regression controls for thread-related issues. After you have discovered and fixed such a performance issue, create a performance regression test for it. The test will consist of an existing or a new performance test run and a new regression control. In the case of Parasoft Load Test, that would be a QoS Monitor Metric for a relevant JVM Threads Monitor channel. For example, for the issue described in the first real-world example (Fig. 4), create a Load Test QoS Monitor metric that checks the time that application threads spent in the BLOCKED state and another metric that checks the number of threads in the PARKED state. It is always a good idea to create named threads in your Java application. This allows you to apply performance regression controls to a name-filtered set of threads.
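As an illustration of the named-threads advice, the sketch below gives worker threads a common name prefix and then counts only the threads with that prefix that are in a given state. Everything in it is hypothetical: `NamedThreadsDemo`, `NamedThreadFactory`, and the `transfer-worker` prefix are not part of Parasoft Load Test. It also assumes that a parked thread shows up as `WAITING` in `Thread.State`, since the JVM has no separate PARKED state.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.atomic.AtomicInteger;

class NamedThreadsDemo {
    /** Creates daemon threads named prefix-1, prefix-2, and so on. */
    static class NamedThreadFactory implements ThreadFactory {
        private final String prefix;
        private final AtomicInteger counter = new AtomicInteger();

        NamedThreadFactory(String prefix) {
            this.prefix = prefix;
        }

        @Override
        public Thread newThread(Runnable task) {
            Thread thread = new Thread(task, prefix + "-" + counter.incrementAndGet());
            thread.setDaemon(true);
            return thread;
        }
    }

    /** Counts live threads in the given state whose name starts with the prefix. */
    static int countByNameAndState(String prefix, Thread.State state) {
        int count = 0;
        for (Thread thread : Thread.getAllStackTraces().keySet()) {
            if (thread.getName().startsWith(prefix) && thread.getState() == state) {
                count++;
            }
        }
        return count;
    }

    /** Parks the given number of named workers on a latch and returns how many were seen waiting. */
    static int demoParkedWorkers(int workers) {
        CountDownLatch release = new CountDownLatch(1);
        ThreadFactory factory = new NamedThreadFactory("transfer-worker");
        List<Thread> started = new ArrayList<>();
        for (int i = 0; i < workers; i++) {
            Thread thread = factory.newThread(() -> {
                try {
                    release.await(); // parks the thread until the latch opens
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            started.add(thread);
            thread.start();
        }

        int parked = 0;
        long deadline = System.currentTimeMillis() + 5000;
        while (parked < workers && System.currentTimeMillis() < deadline) {
            parked = countByNameAndState("transfer-worker", Thread.State.WAITING);
            Thread.yield();
        }

        release.countDown();
        for (Thread thread : started) {
            try {
                thread.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return parked;
    }

    public static void main(String[] args) {
        System.out.println("Parked transfer workers: " + demoParkedWorkers(3));
    }
}
```

A `ThreadFactory` like this can be passed to the `java.util.concurrent.Executors` factory methods, so every pool thread carries a name that a regression control can filter on.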

Once the JVM Threads monitor regression controls have been created, they can be effectively utilized in automated performance tests. Performance regression controls are an indispensable tool for test automation, which is, in turn, a major element of continuous performance testing inside a DevOps pipeline. Performance regression controls should be created not only in response to past performance regressions but also as a defense against potential issues.

The following table summarizes which Threads Monitor channels to use and when:

| Threads Monitor Channel | When to Use |
|---|---|
| DeadlockedThreads, MonitorDeadlockedThreads | Always. Deadlocks are arguably the most serious of the thread-related issues and can break application functionality entirely. |
| BlockedThreads, BlockedTime, BlockedRatio, BlockedCount | Always. Excessive time spent in the BLOCKED state or an excessive number of BLOCKED threads will commonly result in loss of performance. Monitor at least one of these parameters. Also use for performance regression controls. |
| ParkedThreads | Always. An excessive number of threads in the PARKED state can indicate improper use of java.util.concurrent.locks classes and other threading issues. Also use for performance regression controls. |
| TotalThreads | Often. Use to compare the number of threads in BLOCKED, PARKED, or other states to the total number of threads. Also use for performance regression controls. |
| SleepingThreads, WaitingThreads, WaitingTime, WaitingRatio, WaitingCount | Occasionally. Use for performance regression controls related to these states and for exploratory testing. |
| NewThreads, UnknownThreads | Rarely. Use for performance regression controls related to these thread states. |

Parasoft’s JVM Threads Monitor is an effective diagnostic tool for detecting thread-related JVM performance issues as well as for creating advanced performance regression controls. When combined with SOAtest’s Load Test Continuum, the JVM Threads Monitor helps eliminate the need to reproduce performance issues by recording relevant thread details that point to the lines of code responsible for poor performance, helping you improve both application performance and developer and QA productivity.