3 Practical Ways to Future-Proof Your IoT Devices

The Internet of Things continues to evolve, but how do we ensure that its devices meet security and safety standards? Here are three ways to prepare your embedded IoT devices for future safety and compliance standards.

Despite the potential for embedded devices in the IoT context, many devices are not currently required to comply with safety or security standards. And in the Agile world of IoT development, compliance requirements can arrive much later, after the code has already been written and tested. So how can you prepare for the future of your embedded IoT devices?

The term Internet of Things (IoT) refers to a system of network-enabled devices, components, or services that publish and/or consume data. IoT applications are becoming an integral part of our lives: from industrial robots and surgical instruments to self-driving cars and autonomously flying drones. Many of these devices can already affect the safety, privacy, and security of their users. In some cases, the cost of failure is deadly, so building these devices to the prevailing standards is critical.

While it’s best to start embedding compliance activities into software design from the very beginning, it’s a well-known fact that stringent development processes (especially without the aid of automation) can impact time-to-market. Not many developers enjoy doing additional testing and documenting traceability outside of normal working hours, so pragmatic, agile, and fast-paced teams often cannot afford to lose momentum by building compliance into the schedule on the premise that they "might need it" in the future. Instead, many teams choose to "cross that bridge when they come to it."

Unfortunately, there is no magic wand or silver bullet that retroactively "makes" code compliant. What these organizations are learning the hard way is that the cost of adding compliance at the end of the project is orders of magnitude higher than the cost of developing the initial working product.

So what are some low-impact actions you can take today to prepare for satisfying the stringent compliance requirements of tomorrow?

It’s important to understand where your project stands at the moment. Technical debt is the cost of potential rework due to code complexity, combined with any coding standard and security violations that remain in the code. This debt is repaid through subsequent code cleanup, fixing, and testing. One way to get a good grip on where a project stands today is to use automated static code analysis. Static analysis provides insight into the quality and security of a code base and enumerates coding standard violations, as applicable.

Unfortunately, many teams developing embedded applications in C and C++ still rely on their compiler or manual code reviews to catch issues, instead of adopting static analysis. Some teams struggle to adopt static analysis tools for a variety of reasons, such as finding them noisy and hard to use (which shouldn’t be a problem if you learn how to get started properly), or failing to work them into the daily development process due to urgent everyday matters. A common (mis)perception is that the time spent determining which violations are worth fixing is greater than the value of the actual fix.
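As a hedged illustration of the gap between compiler checks and static analysis, the sketch below shows a pattern that a compiler will typically accept without complaint, while an analyzer enforcing an overflow rule such as CERT C INT32-C (signed integer overflow) would be expected to report it. The function names are hypothetical and are not taken from any particular product or code base.

```c
#include <assert.h>
#include <limits.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical helper: adds a sensor delta to a running total.
 * Non-compliant pattern: `total + delta` can overflow, which is
 * undefined behavior for signed int in C. */
int accumulate_unchecked(int total, int delta)
{
    return total + delta;
}

/* Compliant sketch: test the operands before adding and report
 * failure to the caller instead of overflowing. */
bool accumulate_checked(int total, int delta, int *result)
{
    assert(result != NULL); /* precondition: caller supplies storage */

    if ((delta > 0 && total > INT_MAX - delta) ||
        (delta < 0 && total < INT_MIN - delta)) {
        return false; /* would overflow; *result is left untouched */
    }
    *result = total + delta;
    return true;
}
```

Fixing a handful of findings like this one early is usually cheaper than auditing every arithmetic expression once a standard becomes mandatory.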

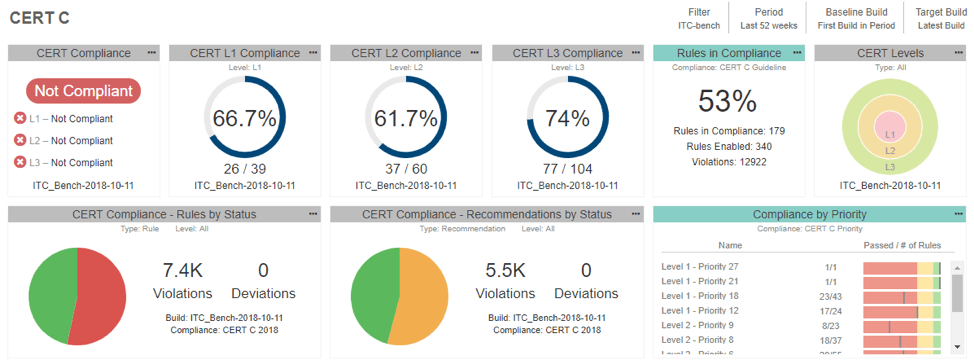

But we find that teams who adopted a small set of critical and mandatory rules spent much less time reworking the code when faced with functional safety audits later in the project. It’s much easier to build safe and secure systems from the ground up by building security in, for instance by implementing the CERT C Secure Coding Guidelines. You can start small. CERT has a sophisticated prioritization system (based on severity, likelihood, and remediation cost, each on a three-point scale, for 27 possible combinations), and if you use Parasoft tools, you can easily view compliance status in a preconfigured dashboard.
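To make the prioritization concrete, here is a minimal sketch of how the CERT risk assessment combines the three grades. In the published CERT scheme, the priority is the product of the three values, and priorities are grouped into levels L1 (12 to 27), L2 (6 to 9), and L3 (1 to 4). The type and function names below are illustrative assumptions, not part of any tool's API.

```c
#include <assert.h>

/* Each CERT metric is graded 1..3. For remediation cost, 3 means
 * "low cost", so violations that are cheap to fix rank higher. */
typedef struct {
    int severity;         /* 1 = low, 2 = medium, 3 = high */
    int likelihood;       /* 1 = unlikely, 2 = probable, 3 = likely */
    int remediation_cost; /* 1 = high, 2 = medium, 3 = low */
} cert_risk;

/* Priority is the product of the three grades, ranging from 1 to 27. */
int cert_priority(cert_risk r)
{
    assert(r.severity >= 1 && r.severity <= 3);
    assert(r.likelihood >= 1 && r.likelihood <= 3);
    assert(r.remediation_cost >= 1 && r.remediation_cost <= 3);
    return r.severity * r.likelihood * r.remediation_cost;
}

/* Maps a priority to its level: returns 1 for L1, 2 for L2, 3 for L3. */
int cert_level(int priority)
{
    if (priority >= 12) {
        return 1; /* L1: fix first */
    }
    if (priority >= 6) {
        return 2; /* L2 */
    }
    return 3;     /* L3 */
}
```

A team that wants to start small could, for example, enable only the rules whose level is L1 and widen the set later.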

Static analysis also helps organizations understand their technical debt by collecting datapoints that help management with safety and security compliance. Managers can easily assess important questions, such as:

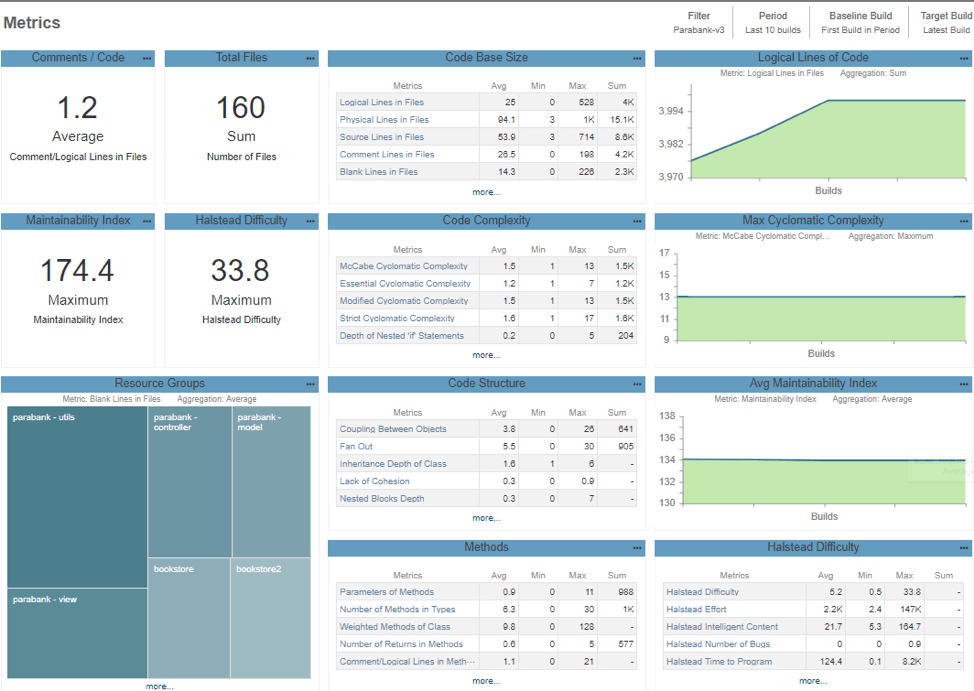

Some standards require measuring cyclomatic complexity and keeping it below a certain threshold. Complexity metrics can also be used to estimate testing effort: for example, the number of test cases you need to demonstrate 100% branch-level coverage to comply with IEC 61508 SIL 2 will be proportional to the McCabe cyclomatic complexity of a function.
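The relationship between complexity and test effort can be sketched with a small, hypothetical function. Counting decision points plus one, as most metric tools do, the function below has a cyclomatic complexity of 4, and four test inputs are needed to exercise both outcomes of every decision. The thresholds and names are invented for illustration.

```c
#include <assert.h>

typedef enum { FAN_OFF, FAN_LOW, FAN_HIGH, FAN_FAULT } fan_mode;

/* Three decision points, so cyclomatic complexity is 3 + 1 = 4. */
fan_mode select_fan_mode(int temp_c)
{
    if (temp_c > 125) {  /* decision 1: reading outside sensor range */
        return FAN_FAULT;
    }
    if (temp_c >= 70) {  /* decision 2 */
        return FAN_HIGH;
    }
    if (temp_c >= 40) {  /* decision 3 */
        return FAN_LOW;
    }
    return FAN_OFF;
}

/* Four cases: each takes a different exit, so together they cover the
 * true and false outcome of all three decisions. */
void test_select_fan_mode(void)
{
    assert(select_fan_mode(130) == FAN_FAULT);
    assert(select_fan_mode(80) == FAN_HIGH);
    assert(select_fan_mode(50) == FAN_LOW);
    assert(select_fan_mode(20) == FAN_OFF);
}
```

Adding one more threshold to the function would add one decision and, in this structure, one more required test case.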

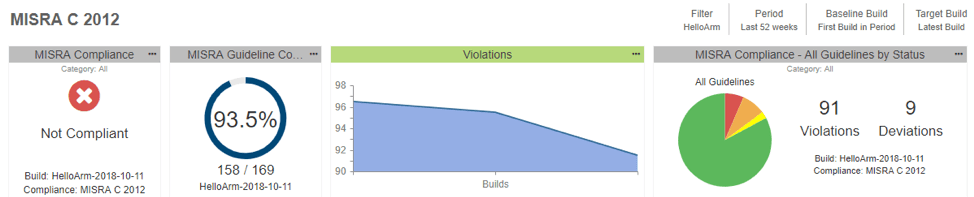

Below is an example from a dashboard showing a project’s MISRA compliance within Parasoft DTP, Parasoft’s reporting and analytics hub:

And here is the same for CERT C:

Seeing the code metrics can help expose more complex areas that deserve additional code review, and lets you monitor how well those areas are covered by tests. Here is an example of the Metrics Dashboard:

So you can start with the basics. Once the team is comfortable with managing the most critical errors, you can broaden the set of standards violations you address. Not all the rules are "set in stone," so it’s important to decide which rules are in or out of the project’s coding standard. At a minimum, adopting the mandatory set of rules in a couple of key coding standards (e.g., MISRA mandatory rules or CERT C rules) makes future safety and security argumentation for a connected device easier.

Most pragmatic engineers tend to agree that blindly creating unit tests for all your functions doesn’t provide a good ROI. However, if your team has access to a unit testing framework as part of the project sandbox, it is a valuable investment. Unit testing can be used intelligently when engineers feel they need to test certain complex algorithms or data manipulations in isolation. There is also significant value in the process of developing unit tests: what we’ve seen from organizations is that the practice of simply writing and executing a unit test makes the code more robust and better designed.
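As a minimal sketch of testing a data manipulation in isolation, the example below exercises a small ring buffer using only the standard `assert` macro. The buffer and its `rb_*` functions are hypothetical; a real project would more likely use its chosen unit testing framework, but the shape of the test (empty, full, and wrap-around cases) carries over.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define RB_CAPACITY 4u

typedef struct {
    uint8_t data[RB_CAPACITY];
    size_t head;  /* index of the oldest element */
    size_t count; /* number of stored elements */
} ring_buffer;

void rb_init(ring_buffer *rb)
{
    rb->head = 0;
    rb->count = 0;
}

bool rb_push(ring_buffer *rb, uint8_t value)
{
    if (rb->count == RB_CAPACITY) {
        return false; /* full: reject rather than overwrite */
    }
    rb->data[(rb->head + rb->count) % RB_CAPACITY] = value;
    rb->count++;
    return true;
}

bool rb_pop(ring_buffer *rb, uint8_t *out)
{
    if (rb->count == 0) {
        return false; /* empty */
    }
    *out = rb->data[rb->head];
    rb->head = (rb->head + 1) % RB_CAPACITY;
    rb->count--;
    return true;
}

/* Unit test: covers the empty, full, and wrap-around cases. Results are
 * stored before asserting so no side effect lives inside assert(). */
void test_ring_buffer(void)
{
    ring_buffer rb;
    uint8_t v = 0;
    bool ok;

    rb_init(&rb);
    ok = rb_pop(&rb, &v);
    assert(!ok);                  /* nothing to pop from an empty buffer */

    for (uint8_t i = 1; i <= RB_CAPACITY; i++) {
        ok = rb_push(&rb, i);
        assert(ok);
    }
    ok = rb_push(&rb, 99);
    assert(!ok);                  /* a full buffer rejects new data */

    ok = rb_pop(&rb, &v);
    assert(ok && v == 1);         /* oldest element comes out first */
    ok = rb_push(&rb, 5);
    assert(ok);                   /* this write wraps around to slot 0 */

    for (uint8_t expected = 2; expected <= 5; expected++) {
        ok = rb_pop(&rb, &v);
        assert(ok && v == expected);
    }
    assert(rb.count == 0);
}
```

Writing the test tends to force decisions that improve the design, such as whether a full buffer rejects or overwrites data.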

When safety or security compliance requirements arise, an organization can quickly ramp up the unit testing effort by adding staff members temporarily. But to quickly scale that effort, the unit testing framework and process should already be understood and documented over the course of the project. The common characteristics of a scalable unit testing framework with future compliance in mind are:

The key takeaway is to deploy all the testing techniques that a future safety standard requires, but on a minimal scale. It is easier to scale this up if and when a certification need appears than to start from scratch.

Architecting embedded systems requires considering plenty of "ilities": simplicity, portability, maintainability, scalability, and reliability while solving the tradeoffs between latency, throughput, power consumption, and size constraints. When architecting a system that will potentially be connected to a large IoT ecosystem, many teams are not prioritizing safety and security over some of these other quality factors.

To make future safety compliance easier (and follow good architectural practices), you can separate components in time and space. For example, you can design a system where all critical operations are executed on a separate dedicated CPU, while all non-critical operations run on another, hence providing a physical separation. Another option is to employ separation kernel hypervisor and microkernel concepts. There are other options available, but the key is to adopt the architectural approaches of separation of concerns, defense in depth, and separation for mixed criticality as early as possible. These approaches not only reduce the amount of work required to comply with safety and security standards, but they also improve the quality and resilience of the application. For example, here are some ways to isolate critical code:

Separating critical functions from non-critical ones reduces the scope of the future verification effort needed to demonstrate compliance.
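At the code level, one way to picture this separation is a single, narrow entry point through which non-critical code (for example, a network-facing task) can reach the critical side, with every message validated at the boundary. The sketch below is an assumption-laden illustration: the message layout, opcodes, limits, and function names are all hypothetical, and in a real system the boundary would typically be enforced by a separate CPU, an MPU, or a hypervisor partition rather than by convention alone.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* The only message type the non-critical side may send across. */
typedef struct {
    uint8_t opcode;   /* one of the CMD_* values below */
    int16_t setpoint; /* requested motor speed in RPM */
} command_msg;

enum { CMD_STOP = 0, CMD_SET_SPEED = 1 };
enum { SPEED_MIN_RPM = 0, SPEED_MAX_RPM = 3000 };

/* Critical-side state: file-local, so non-critical code cannot touch it. */
static int16_t g_speed_rpm = 0;

/* Critical-side entry point: validates everything crossing the boundary
 * and rejects anything it does not recognize (defense in depth). */
bool critical_handle_command(const command_msg *msg)
{
    bool accepted = false;

    if (msg != NULL) {
        if (msg->opcode == CMD_STOP) {
            g_speed_rpm = 0;
            accepted = true;
        } else if (msg->opcode == CMD_SET_SPEED &&
                   msg->setpoint >= SPEED_MIN_RPM &&
                   msg->setpoint <= SPEED_MAX_RPM) {
            g_speed_rpm = msg->setpoint;
            accepted = true;
        }
    }

    /* Invariant: the stored speed always stays within the safe range. */
    assert(g_speed_rpm >= SPEED_MIN_RPM && g_speed_rpm <= SPEED_MAX_RPM);
    return accepted;
}

/* Read-only accessor for monitoring from outside the critical side. */
int16_t critical_current_speed(void)
{
    return g_speed_rpm;
}
```

With this shape, the code that must be verified to a safety standard is limited to what sits behind `critical_handle_command`, rather than the whole application.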

Many edge devices in the IoT ecosystem provide critical services that may fall under future safety and security standards. Of course, trying to comply with standards requirements without knowing whether compliance will be needed is not a cost-effective strategy. To prepare for the future, organizations can adopt key design techniques, unit testing approaches, and static analysis tools, and collect metrics to support future needs. Software teams can adopt these approaches seamlessly into their existing processes if they start early enough. Starting early with the right approach, one that can be scaled later, prevents the need for an almost Herculean effort to bring software into compliance after it has been developed, tested, and deployed.

"MISRA", "MISRA C" and the triangle logo are registered trademarks of The MISRA Consortium Limited. ©The MISRA Consortium Limited, 2021. All rights reserved.