Parasoft Blog

Explore how AI-driven testing solutions empower Java teams to build quality into every stage—from writing clean code to validating complex integrations to even optimizing manual regression testing workflows.

Modern Java applications are more complex than ever. With microservices, APIs, cloud-native architectures, and rapid release cycles, development teams face immense pressure to deliver high-quality software quickly.

Testing is a critical quality gate that often becomes a bottleneck, slowing down releases and increasing risk. With the productivity gains developers are seeing with AI, this bottleneck will constrict release trains even further unless AI can revolutionize how teams test software across the entire development life cycle.

Java systems today rarely run as monoliths. Instead, they’re made up of interconnected microservices and user interfaces that continuously evolve. With all this architectural complexity and rapid development cycles, teams face some real challenges.

First, there’s the risk of bugs and security issues slipping through the cracks.

Then, scaling and maintaining automated tests, especially the tricky end-to-end ones, can quickly become a costly, time-consuming headache without the right tools. Test environment roadblocks, like lack of access to external integrations or test data, delay testing and increase project costs.

On top of that, managing thousands of tests across different layers can be overwhelming, leading to slower feedback and delays in getting changes out the door.

These challenges demand a new approach to testing: one that’s faster, smarter, and resilient enough to keep pace with modern Java development. That’s where AI-driven testing comes in, transforming bottlenecks into opportunities and enabling teams to focus on what matters most: delivering reliable software without slowing down.

The foundation of any resilient Java application lies in the quality of its code. But maintaining that quality isn’t easy, especially when development cycles are fast and codebases are large. That’s why a shift-left approach to testing, starting with static analysis, is essential.

But traditional static analysis has a reputation: it often overwhelms developers with a flood of warnings and violations, many of which aren’t resolved within the sprint. Over time, this creates a growing backlog of unresolved findings that can stall progress and introduce risk.

Remediating these issues efficiently becomes a real challenge, especially when developers are unfamiliar with the rules that triggered the violations or when they’re juggling many alerts with no clear sense of priority.

This is where AI changes the game.

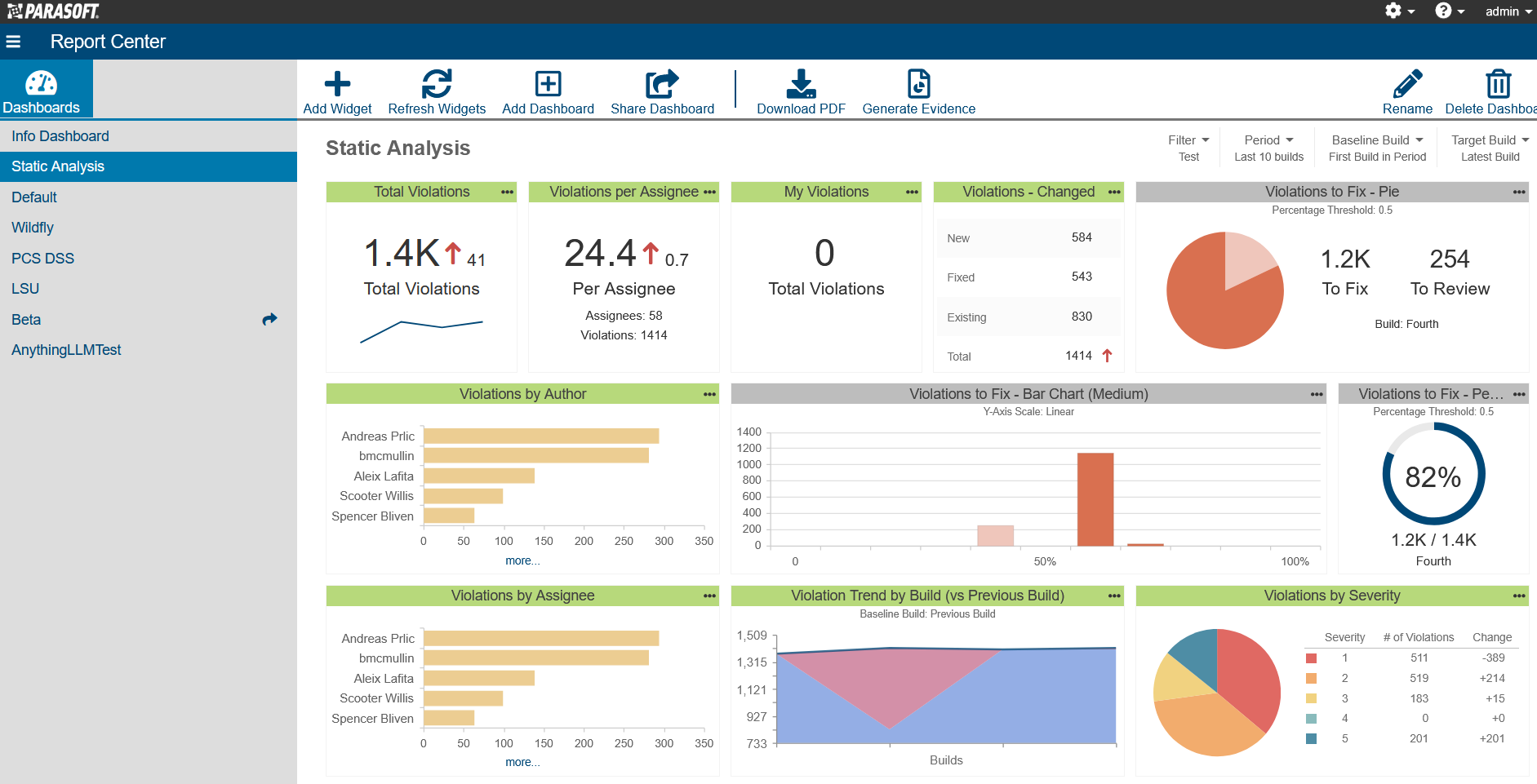

Modern solutions like Parasoft Jtest, especially when paired with Parasoft DTP for centralized reporting and analytics, leverage AI to improve the static analysis experience.

More specifically, recent AI enhancements help developers work faster and smarter by:

By empowering developers with intelligent static analysis at the point of code creation, teams can dramatically reduce technical debt, streamline compliance, and avoid costly rework down the line.

The result? Cleaner code, earlier validation, and a stronger foundation for everything that comes next in the Java development lifecycle.

Parasoft DTP dashboard displaying static analysis results.

Unit testing verifies that lower-level units of code behave as expected, supports robust regression testing, and helps developers catch bugs before they snowball into bigger issues. But there’s a catch: writing and maintaining a comprehensive suite of unit tests, especially for legacy or complex code, is time-consuming and often neglected under tight deadlines.

Again, this is where AI steps in. Parasoft Jtest integrates AI directly into the developer’s IDE to transform unit testing. It enables teams to quickly generate meaningful tests for previously uncovered code, boosting coverage with minimal manual effort.

Here’s how Jtest streamlines the process from initial test creation to execution:

With Parasoft Jtest, AI becomes a strategic part of your unit testing workflow, increasing coverage, tightening feedback loops, and helping your team deliver reliable code faster. It turns unit testing from a bottleneck into a productivity boost, giving developers more time to focus on what matters most: building great software.

Codeless test automation tools have made it easier to create API and UI tests, but they still require a significant time investment in configuration and maintenance. AI-powered testing solutions reduce the manual effort needed to build and maintain automation, accelerating the entire process and helping teams keep pace with rapid development cycles.

Automated API testing offers a more scalable, maintainable way to validate application behavior, especially when compared to brittle and slow web UI tests. But many teams still struggle to adopt and scale API testing due to the technical expertise required to build scenarios from scratch, configure assertions, manage test data, and handle complex integrations.

Proprietary AI technology in SOAtest simplifies test creation by analyzing recorded traffic—from either manual UI workflows or API interactions captured through a proxy—and automatically generating reusable test scenarios. These tests are enriched with intelligent assertions, parameterized inputs, and customizable validations, accelerating test creation and increasing coverage with minimal manual overhead.

Learn more about the SOAtest Smart API Test Generator.

Agentic AI, delivered through an embedded AI Assistant, takes it a step further. Testers can describe their testing goals in natural language, and the assistant responds with auto-generated test cases based on service definitions like OpenAPI or Swagger. It can also generate test data on demand and automatically parameterize the generated test scenario, making it easy to build data-driven, effective test suites.

By lowering the technical barriers to entry and eliminating much of the manual effort traditionally involved in API testing, SOAtest’s AI capabilities enable broader adoption across QA teams. They also encourage teams to validate more end-to-end functionality through resilient, scalable API tests rather than over-relying on fragile and costly UI tests.

See how agentic AI is changing API testing.

While API testing offers greater stability and scalability, web UI testing remains a vital part of ensuring application quality. It validates the user experience, from visual layout to interactive workflows, and catches issues that API tests can’t detect.

But UI tests are inherently more fragile than other types of automated tests. Timing issues, such as improper wait conditions or slow-loading elements, are frequent culprits. Even minor changes like updates to the HTML DOM or dynamic content can cause tests to fail, even if the underlying functionality is working correctly. This leads to false positives, unnecessary debugging, and constant maintenance that slows teams down.

Parasoft Selenic brings intelligence to Selenium-based UI testing in Java by applying proprietary machine learning to stabilize execution. During test runs, it detects common failure points such as element locator mismatches or timing-related issues and applies self-healing to keep tests running smoothly. When a failure occurs, Selenic automatically identifies and uses alternative locators, and adapts to timing issues by intelligently adjusting wait conditions on the fly.

After the run, developers are presented with recommendations to permanently update the test code based on successful healing. With a single click, they can accept those changes and avoid similar failures in future runs.
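The fallback idea described above can be illustrated with a minimal Java sketch. This is not Selenic's API or its machine learning model; it only shows the general pattern of trying a primary locator, falling back to alternatives, and remembering which one worked so it can be suggested as a permanent fix. The class name `FallbackLocatorSketch`, the `Located` type, the locator strings, and the `find` function (which stands in for a real Selenium lookup) are all hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Function;

// Hypothetical illustration of locator fallback; not Selenic's implementation.
final class FallbackLocatorSketch {

    /** The element that was found, the locator that found it, and whether a fallback was needed. */
    public static final class Located<E> {
        public final E element;
        public final String locatorUsed;
        public final boolean healed;

        Located(E element, String locatorUsed, boolean healed) {
            this.element = element;
            this.locatorUsed = locatorUsed;
            this.healed = healed;
        }
    }

    /**
     * Tries the primary locator first, then each alternative in order.
     * `find` is an assumed stand-in for a driver lookup and returns
     * Optional.empty() when the locator matches nothing.
     */
    public static <E> Optional<Located<E>> locate(Function<String, Optional<E>> find,
                                                  String primary,
                                                  List<String> alternatives) {
        Optional<E> hit = find.apply(primary);
        if (hit.isPresent()) {
            return Optional.of(new Located<>(hit.get(), primary, false));
        }
        for (String candidate : alternatives) {
            Optional<E> alt = find.apply(candidate);
            if (alt.isPresent()) {
                // A fallback matched: flag it so it can be reported after the run.
                return Optional.of(new Located<>(alt.get(), candidate, true));
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Fake "page": the original id is gone after a DOM change, but a CSS locator still matches.
        Map<String, String> page = Map.of("css=#submit-v2", "<button>Submit</button>");
        Optional<Located<String>> result = locate(
                locator -> Optional.ofNullable(page.get(locator)),
                "id=submit",
                List.of("name=submit", "css=#submit-v2"));
        result.ifPresent(r ->
                System.out.println("used " + r.locatorUsed + ", healed=" + r.healed));
    }
}
```

In this sketch, the `healed` flag plays the role of the post-run recommendation: a test harness could collect every lookup where it is true and propose replacing the stale primary locator with `locatorUsed`.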

By reducing test flakiness and simplifying maintenance, AI ensures your web UI tests remain a reliable part of your quality strategy, even as your application evolves. Try it yourself—download the free Selenic Desktop Edition and put AI-driven test healing into action.

We previously introduced test impact analysis (TIA) in the context of unit testing, where it accelerates feedback on pull requests and code merges. But its value extends across the SDLC, playing a key role in scaling functional and UI testing while maintaining fast, focused feedback loops.

In fast-paced DevOps pipelines, running full regression suites for every change is sensible, but it quickly becomes costly. It slows CI/CD cycles, consumes resources, and delays validation, often for changes that affect only a small portion of the application.

AI-powered Test Impact Analysis eliminates the guesswork around what needs to be tested. Parasoft TIA uses proprietary AI to automatically correlate recent code changes with only the relevant test cases, whether they’re automated API or UI tests or even manual regression tests.

Parasoft testing tools like Parasoft SOAtest and Selenic include TIA support out of the box. However, Parasoft TIA can be used with any testing tool thanks to its open REST API design. It supports testing both Java and .NET applications, including microservices architectures where the system under test is distributed across many independent components. With Parasoft TIA, development and QA teams can optimize test execution across all layers of the system.
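The core idea behind change-based test selection can be sketched in a few lines of Java. This is a simplified illustration, not Parasoft TIA's algorithm or REST API: it assumes a coverage map recorded from an earlier run (test name to the classes that test exercised) and a set of classes changed since then, and it selects every test whose coverage overlaps the changes. The class name `TestImpactSketch`, the method `impactedTests`, and all test and class names are hypothetical.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical illustration of test impact analysis; not Parasoft TIA's implementation.
final class TestImpactSketch {

    /**
     * @param coverage test name -> classes the test exercised in a baseline run
     * @param changed  classes modified since that baseline
     * @return names of tests whose recorded coverage touches a changed class, sorted
     */
    public static Set<String> impactedTests(Map<String, Set<String>> coverage,
                                            Set<String> changed) {
        Set<String> impacted = new TreeSet<>();
        for (Map.Entry<String, Set<String>> entry : coverage.entrySet()) {
            // A test is impacted when its coverage and the change set share a class.
            if (!Collections.disjoint(entry.getValue(), changed)) {
                impacted.add(entry.getKey());
            }
        }
        return impacted;
    }

    public static void main(String[] args) {
        // The same map can hold automated API tests, UI tests, and manual test cases.
        Map<String, Set<String>> coverage = new HashMap<>();
        coverage.put("api:createInvoice", Set.of("InvoiceService", "TaxCalculator"));
        coverage.put("ui:loginFlow", Set.of("AuthController"));
        coverage.put("manual:refundChecklist", Set.of("RefundService", "InvoiceService"));

        Set<String> changed = Set.of("InvoiceService");
        System.out.println(impactedTests(coverage, changed));
    }
}
```

With only `InvoiceService` changed, the sketch selects the API test and the manual checklist item and skips the login UI test. A production tool also has to handle concerns this sketch ignores, such as stale coverage data and new code that no test covers yet.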

Here’s how AI-powered TIA helps scale your testing without slowing you down:

By bringing AI into the equation, Test Impact Analysis evolves from a performance optimization into a strategic advantage, accelerating delivery while preserving the high quality that modern Java applications demand.

Manual regression testing remains a persistent aspect of software projects. Many teams face the reality that not all regression testing can, or in some cases should, be automated, which creates a bottleneck to releasing software quickly.

As applications evolve quickly, testers often struggle to complete manual regression testing at the pace of development. Without clear insight into what’s changed, teams have difficulty deciding which tests to run, resulting in either redundant work and delayed feedback or missed defects that escape to production.

Parasoft’s AI-powered TIA brings clarity to this process by highlighting exactly which manual regression tests are impacted by changes in the application. Instead of guessing what to retest, or retesting everything, QA teams can concentrate on the tests that truly matter, guided by automated analysis of code changes between builds.

This targeted, change-aware approach makes manual regression testing more strategic and less time-consuming. It helps testers validate critical functionality without missing key risks or wasting time on unaffected features.

AI isn’t just a buzzword; it’s a practical, powerful tool that’s changing how Java teams test and deliver software. From smarter static analysis and AI-assisted unit testing to scaling automation and accelerating CI/CD pipelines, AI helps teams build resilience into their testing strategy, reduce risk, and deliver faster.

Whether you’re a developer, tester, or QA lead, adopting AI-powered testing tools means less manual effort, smarter workflows, and more confidence in every release.

Ready to see AI transform your Java testing? Talk to a Parasoft expert for a custom demo or evaluation.