Why Manual Testing Still Matters in an AI World & How to Modernize It

QA teams face unrelenting pressure. See how test impact analysis brings data-driven precision to your QA strategy, using AI and automation to pinpoint exactly what needs testing and transform your workflow into a targeted, high-impact practice.

In our DevOps-driven world of CI/CD pipelines and rapid deployments, it’s easy to assume that automation, and now AI, have made manual testing obsolete. But the reality is different.

Manual testers still play a critical role in quality assurance, providing the kind of human insight and context-aware validation that automated tests can’t replicate.

The challenge? Keeping manual testing relevant and efficient in an environment that demands speed, precision, and constant iteration. Let’s explore why manual testing still matters and how to modernize it.

Despite the growing emphasis on automation and AI, most QA teams still rely on a hybrid testing strategy that combines both automated and manual testing. And for good reason.

By hybrid testing strategy, we mean a balanced approach: automating repetitive, high-volume, or regression tests while reserving manual testing for new features, user experience, usability, edge cases, or regression testing when automation is impractical.

But to stay effective in today’s software development life cycle (SDLC), manual testing needs to evolve. That means shifting from checklist-based testing to data-backed and insight-driven validation.

Modern development moves fast. Code changes are frequent. Builds deploy continuously. And release cycles are measured in days, not weeks.

That pace puts serious pressure on teams that still rely heavily on manual regression testing.

Without clear and immediate visibility into what’s changed or where to focus, manual testers face a poor trade-off. They can re-execute broad regression suites to play it safe, spending time on areas untouched by recent changes, or they can narrow their testing by guesswork and risk missing areas those changes affected.

To keep pace with modern development, manual regression testing needs to be more focused, efficient, and aligned with development changes.

The rapid adoption of AI-powered development tools has accelerated software delivery. Code can now be generated or modified automatically, sometimes across multiple modules, in a matter of minutes.

While this boosts development velocity, it also produces frequent changes that must be validated carefully, as AI-generated code is often created with limited context and can contain flaws, downstream defects, or even security vulnerabilities.

This combination of speed and risk puts QA teams under increasing pressure, and the burden falls hardest on teams that still depend on manual regression testing.

As a result, manual testers are now tasked with validating a growing number of changes, ensuring that even the fastest-moving AI-assisted development doesn’t compromise quality. Focused, data-driven, and strategic testing has never been more critical.

QA teams must prioritize their work through data-backed analysis that narrows the scope of testing to the highest-risk areas affected by code changes.

That starts with rethinking the way manual regression testing is approached. Instead of manually testing everything "just in case," testers should be guided by coverage data.

By making manual regression testing strategically focused, teams can boost quality without slowing down development.

This is where test impact analysis (TIA) and its automated analysis of code coverage come into play, giving manual testers the clarity they need to act with purpose, reduce redundancy, and ensure meaningful test coverage.

"With test impact analysis, our manual testers know exactly which areas to focus on. It’s cut our regression cycles down dramatically and reduced our testing effort."

—Director of Quality Assurance

Explore our results: Discover how WoodmenLife achieved 212% faster regression testing »

Test impact analysis uses the code coverage collected during manual testing to prioritize manual regression efforts automatically. Rather than having testers guess what to retest, TIA maps each code change to the manual tests that exercise the affected code and produces a list of impacted tests, so teams can focus on high-risk areas and reduce wasted effort.
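To make the core idea concrete, here is a minimal Python sketch. It assumes coverage has already been recorded for each manual test and that the set of changed methods between two builds is known. The function name `impacted_tests`, the (file, method) granularity, and the sample data are hypothetical illustrations, not the API or algorithm of any particular TIA product.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical granularity: a code unit is (file path, method name).
# Real tools may track lines, methods, or classes instead.
CodeUnit = Tuple[str, str]


def impacted_tests(
    coverage: Dict[str, Set[CodeUnit]],
    changed: Set[CodeUnit],
) -> Tuple[List[str], Set[CodeUnit]]:
    """Return (manual tests to re-run, changed units that no test covers).

    coverage: maps each manual test to the code units recorded while a
              tester executed it against an instrumented build (assumed input).
    changed:  code units modified between the previous and the new build.
    """
    # A test is impacted if any code unit it exercised has changed.
    to_rerun = sorted(test for test, units in coverage.items() if units & changed)

    # Changed code that no recorded test touched is a coverage gap.
    covered = set().union(*coverage.values())
    gaps = changed - covered
    return to_rerun, gaps


if __name__ == "__main__":
    # Hypothetical coverage recorded from three manual test sessions.
    coverage = {
        "checkout_with_coupon": {("cart.py", "apply_coupon"), ("cart.py", "total")},
        "guest_checkout": {("cart.py", "total"), ("auth.py", "guest_login")},
        "update_profile": {("profile.py", "save")},
    }
    # Hypothetical methods changed in the new build.
    changed = {("cart.py", "apply_coupon"), ("billing.py", "refund")}

    tests, gaps = impacted_tests(coverage, changed)
    print(tests)  # ['checkout_with_coupon']
    print(gaps)   # {('billing.py', 'refund')}
```

Because selection reduces to a set intersection, it is cheap enough to recompute for every build. The second return value flags changed code that no existing manual test exercises, which is where new tests may be needed.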

TIA answers the most critical questions in any test cycle.

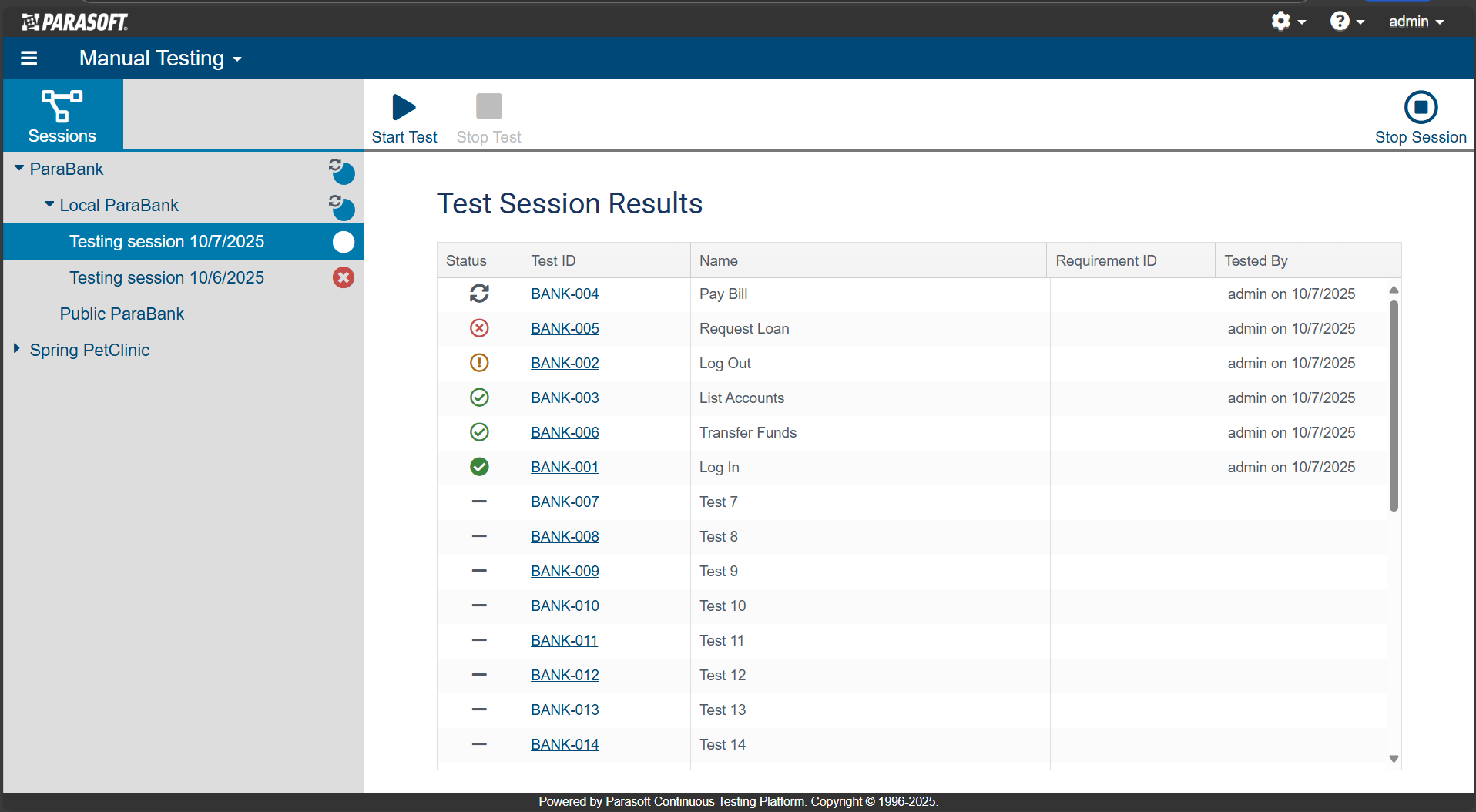

TIA shows testers exactly which manual tests need to be re-executed for each build, eliminating unnecessary testing while ensuring thorough coverage.

TIA enables manual regression testing to run continuously. Because the list of tests to run is updated automatically for each new build, there’s no waiting for code freezes or end-of-sprint windows to start regression testing.

As part of test impact analysis, manual code coverage analysis tracks which parts of the application were exercised during manual test sessions, showing teams what their manual tests actually cover and where gaps remain.

Explore our solution: Code coverage tools & solutions to make QA faster »

The result: Manual testing becomes precise and risk-based, helping teams focus on high-impact areas, reduce wasted effort, and deliver quality faster.

The demands on QA teams are growing. In our new era of AI and advanced automation, if your team still relies heavily on manual testing, the pressure to keep pace can be overwhelming.

With test impact analysis, you can transform manual testing from a bottleneck into a focused and efficient practice. By automatically analyzing coverage and changes, TIA ensures you don’t have to sacrifice quality for speed.

Ready to reduce your test execution time by 90%?