How AI Increases Confidence for Manual Testers in a Changing Codebase

Manual testing remains essential to delivering high-quality software. The challenge is knowing where to focus testing efforts. Read on to learn how test impact analysis helps manual testers work with greater precision more efficiently.

In fast-moving development environments, manual testers still play a vital role in ensuring quality. They bring a human perspective to testing—validating user experiences, business rules, and workflows that automation might miss. But one major challenge continues to weigh them down: figuring out what to test after every change.

Without clear insight into the scope and impact of code changes, manual testers are left guessing. They base their efforts on evolving product requirements—often translated into user stories, Jira tickets, or developer notes—to decide where to focus their limited time. That guesswork can lead to overtesting, missed regressions, or inefficient testing cycles.

Fortunately, it doesn’t have to be this way. AI-enhanced test impact analysis and manual code coverage are powerful tools that eliminate uncertainty and help manual testers focus where it matters most.

In Agile and DevOps pipelines, change is constant. New builds are often pushed daily, sometimes hourly, and manual testers are expected to jump in quickly and validate them. But when they’re handed a new release, the key questions are always the same: What changed? And what do I need to test?

To test efficiently and confidently, manual testers need visibility into what areas of the application are at risk from recent updates. This is where AI and test impact analysis come in.

Test impact analysis, or TIA, is an AI-enhanced method for making smarter testing decisions by identifying the connection between changes in the code and the tests that validate those changes.

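Rather than requiring testers to re-execute the entire regression suite, it works by correlating each code change with the tests that exercise the changed code, so that only those tests are flagged for re-execution.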

In other words, it helps testers focus their time and energy on the areas that actually need attention.

This becomes especially valuable in fast-paced development cycles, where code is constantly evolving and testing windows are tight. QA teams may know which requirements are changing, but it’s not always clear which tests should be re-executed, especially when updates can unintentionally affect other parts of the application. TIA shows where the changes happened and which tests are most likely to catch issues introduced by those changes.
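The core idea can be illustrated with a minimal sketch. It assumes that each test has a recorded set of source files it exercised in an earlier run, and that the set of files changed in the latest build is known. The test names, file names, and the `select_impacted_tests` helper are hypothetical and chosen only for illustration; this is not Parasoft's implementation or API.

```python
from typing import Dict, List, Set


def select_impacted_tests(
    coverage_by_test: Dict[str, Set[str]],
    changed_files: Set[str],
) -> List[str]:
    """Return the tests whose recorded coverage touches any changed file."""
    impacted = [
        test
        for test, covered in coverage_by_test.items()
        if covered & changed_files  # non-empty intersection means overlap
    ]
    return sorted(impacted)


# Hypothetical coverage recorded during earlier manual test sessions.
coverage_by_test = {
    "checkout_with_saved_card": {"cart.py", "payment.py"},
    "update_shipping_address": {"profile.py", "address.py"},
    "apply_discount_code": {"cart.py", "pricing.py"},
}

# Hypothetical set of files changed in the latest build.
changed_files = {"pricing.py", "payment.py"}

impacted = select_impacted_tests(coverage_by_test, changed_files)
print(impacted)  # ['apply_discount_code', 'checkout_with_saved_card']
```

In this sketch, the shipping address test is left out of the cycle because none of the files it exercised were changed. A real tool would typically work at a finer granularity than whole files.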

For manual testers, this insight is a game-changer. Rather than retesting large swaths of functionality just to be safe, they can approach each test cycle with greater focus. They know which features have changed, which workflows are impacted, and where their efforts will have the most value. It’s a shift from wide, unfocused regression toward a more targeted and efficient testing approach.

This kind of clarity also helps protect against tester fatigue. With constant pressure to retest everything—or test without knowing exactly what’s changed—manual testing gets exhausting. TIA breaks that cycle. It removes guesswork and repetition, allowing testers to focus on meaningful work rather than low-risk, redundant tasks. With clearer priorities and fewer unnecessary tests, testers can maintain a sustainable pace without sacrificing quality.

Ultimately, test impact analysis turns manual testing from a guessing game into a targeted, data-informed process. It brings confidence to every test session, ensuring that testers aren’t just working harder—they’re working smarter and staying engaged.

In fast-paced development environments, strong collaboration between development and QA is essential—but not always easy. Communication gaps, unclear priorities, and shifting requirements can all get in the way of efficient teamwork. To truly move fast without breaking things, teams need more than visibility—they need alignment.

With TIA, development and QA align more naturally. As developers make changes, TIA identifies exactly which tests, manual or automated, are affected. Instead of QA having to ask what changed, the data provides a direct answer. Manual testers can immediately see which of their test cases are impacted and focus their energy on validating just those areas.

This eliminates ambiguity, cuts down on misunderstandings, and removes the pressure to retest everything "just in case." It also empowers testers to have more informed conversations with developers, backed by test impact analysis. The result is a faster, more efficient cycle where everyone is focused on what matters most.

Code coverage collected during the execution of manual tests adds another layer of value to this collaboration. During manual test sessions, coverage agents deployed across the application architecture collect code execution data. This runtime coverage data is then used by test impact analysis to correlate code changes with relevant tests. But it doesn’t stop there—coverage collected during manual testing can also be merged with code coverage data from automated unit and functional testing.

Development teams integrating these insights gain a more complete picture of overall test coverage—and manual testers gain new visibility into how their work contributes to shared development goals, such as meeting code coverage thresholds.
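As a rough illustration of merging coverage and checking it against a threshold, the sketch below assumes coverage is stored as a mapping from file name to the set of covered line numbers. The data, the `merge_coverage` and `coverage_percent` helpers, and the 80% threshold are all hypothetical; real coverage tools use their own report formats.

```python
from typing import Dict, Set

Coverage = Dict[str, Set[int]]  # file name -> covered line numbers


def merge_coverage(*sources: Coverage) -> Coverage:
    """Union the covered lines per file across all coverage sources."""
    merged: Coverage = {}
    for source in sources:
        for file_name, lines in source.items():
            merged.setdefault(file_name, set()).update(lines)
    return merged


def coverage_percent(covered: Coverage, total_lines: Dict[str, int]) -> float:
    """Percentage of coverable lines that were hit at least once."""
    total = sum(total_lines.values())
    if total == 0:
        return 0.0
    hit = sum(len(covered.get(name, set())) for name in total_lines)
    return 100.0 * hit / total


# Hypothetical coverage from manual sessions and from automated tests.
manual = {"cart.py": {1, 2, 3}, "payment.py": {10, 11}}
automated = {"cart.py": {3, 4}, "pricing.py": {5}}

# Hypothetical count of coverable lines in each file.
total_lines = {"cart.py": 5, "payment.py": 4, "pricing.py": 1}

THRESHOLD = 80.0  # assumed team target, for illustration only

merged = merge_coverage(manual, automated)
percent = coverage_percent(merged, total_lines)
print(f"{percent:.1f}% covered, threshold met: {percent >= THRESHOLD}")
```

Here the manual sessions contribute lines in `payment.py` that the automated tests never reach, which is the kind of contribution the merged view makes visible.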

By integrating TIA and manual code coverage into the quality process, teams aren’t just improving test strategy—they’re building a more collaborative culture. Developers and testers move in sync, speak the same language, and share a common goal: delivering high-quality software.

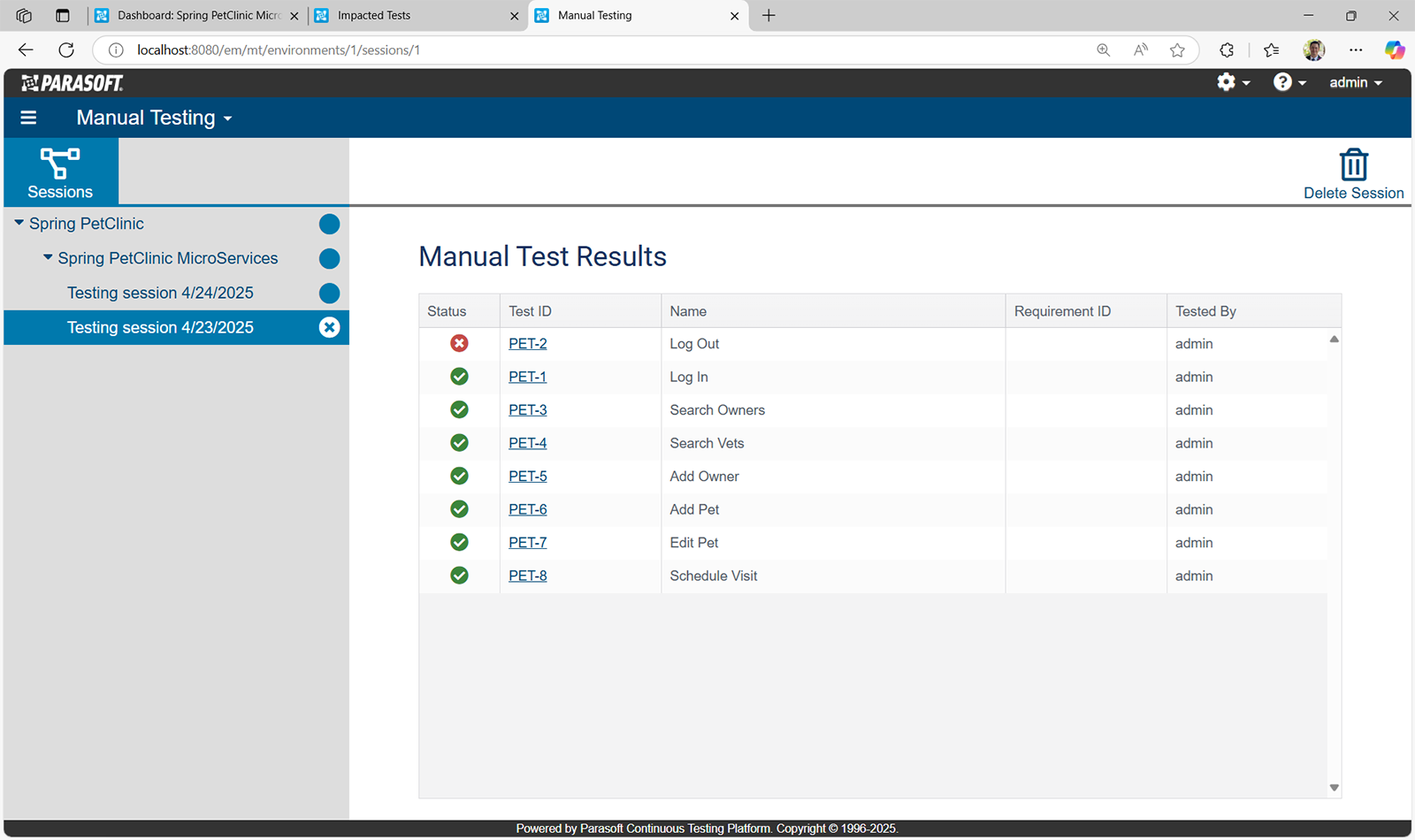

Parasoft CTP displays manual test execution results, helping teams track outcomes and optimize testing efforts.

Parasoft brings clarity to manual testing workflows by enabling both test impact analysis and manual code coverage in one integrated platform.

Here’s what a modern workflow looks like when manual testers use Parasoft’s platform.

With Parasoft, manual testers no longer have to choose between speed and thoroughness. They can do both—with ease and confidence.

Manual testing doesn’t have to rely solely on requirements mapping. With test impact analysis and manual code coverage, testers can work with greater precision and contribute measurable value to the development process.

In a world of constant change, the ability to test the right things at the right time isn’t just helpful—it’s essential. Parasoft gives manual testers the visibility, insight, and confidence they need to test smarter, prioritize effectively, and drive quality forward with purpose.

Watch our webinar: How to Focus Manual Testing Where It Counts