
Leveraging Embedded AI for Automotive Software Testing

Are you considering the next steps in optimizing your embedded testing strategy for automotive software? This post outlines practical integration approaches that can improve speed, accuracy, and consistency.



Artificial intelligence is steadily becoming part of the embedded systems that power today’s vehicles. Automotive teams are moving beyond experiments with large language models (LLMs) and exploring how embedded AI can support critical tasks like software testing, especially in environments where memory, compute, and energy are limited.

Parasoft’s AI-enabled solutions show significant productivity gains for development teams. Customers report that embedded AI techniques are increasing developer productivity by an estimated four to ten hours per developer per week. For a team of 100 developers, that translates to more than 20,000 hours annually, helping teams manage increasingly complex systems more efficiently while staying aligned with performance and safety requirements.
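The annual figure follows from simple multiplication. Assuming 52 working weeks per year (the post does not state the basis used), the low and high ends of the estimate work out as:

$$
4\ \tfrac{\text{h}}{\text{dev}\cdot\text{week}} \times 100\ \text{dev} \times 52\ \tfrac{\text{weeks}}{\text{year}} = 20{,}800\ \tfrac{\text{h}}{\text{year}}, \qquad 10 \times 100 \times 52 = 52{,}000\ \tfrac{\text{h}}{\text{year}}
$$

With fewer working weeks (for example, 48 after holidays), the low end drops to 19,200 hours, so the "more than 20,000" figure depends on that assumption.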

At the same time, many engineers are navigating a mix of enthusiasm and uncertainty as they evaluate how AI fits into their existing workflows. Let’s explore practical integration approaches.

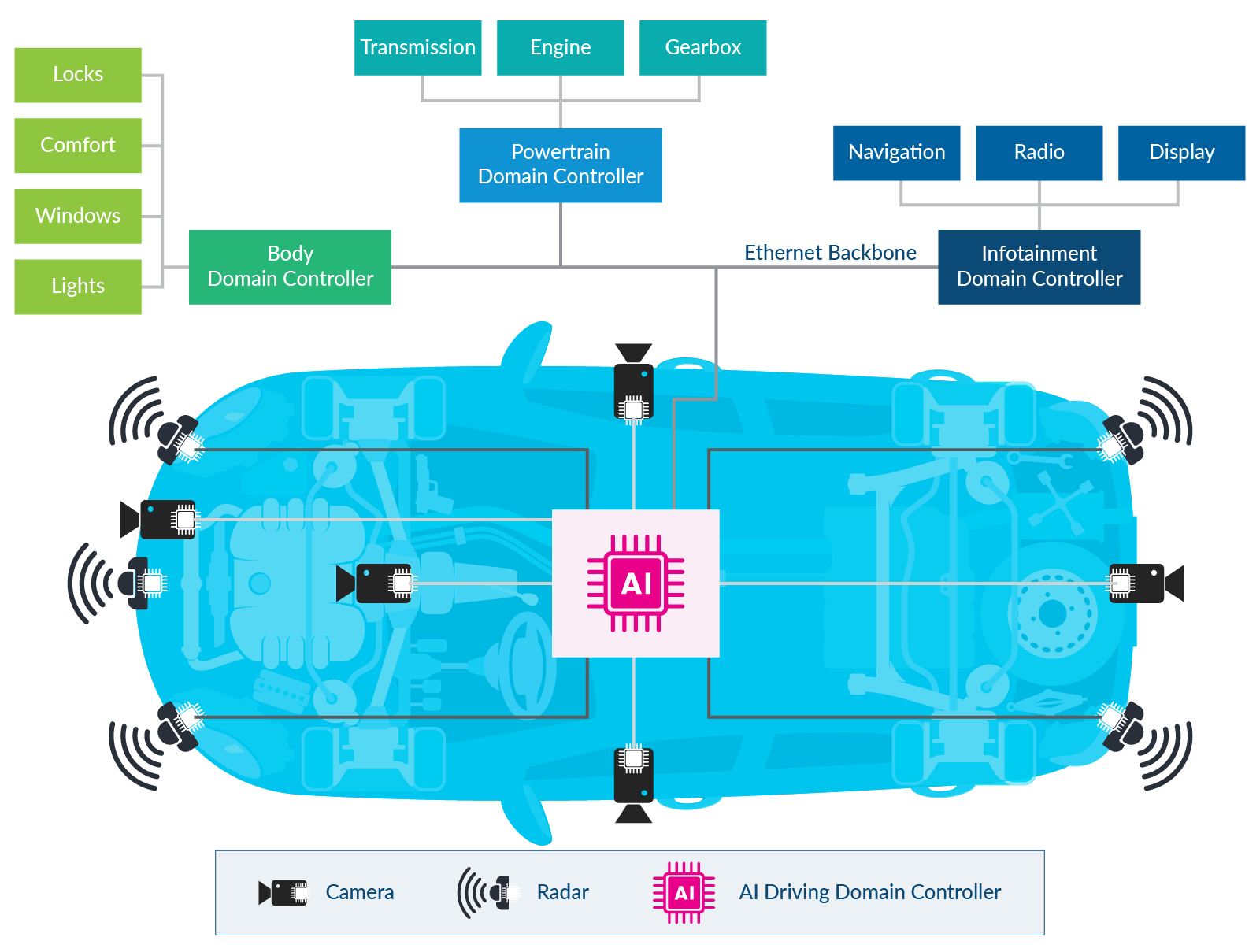

Modern vehicles rely on embedded AI to support real-time decision-making directly on hardware. These systems adapt to changing driving conditions, optimize performance, and enhance safety protocols without relying on continuous access to the cloud.

Self-driving tech is transforming mobility, making it smarter, safer, and more efficient than ever.

Still, many automotive engineering teams struggle to align these capabilities with strict constraints. Limited memory and power, combined with stringent standards such as ISO 26262 for functional safety (including its Automotive Safety Integrity Level, or ASIL, requirements), ISO/PAS 8800 for safety and artificial intelligence in road vehicles, and AUTOSAR for software architecture, can make AI integration feel out of reach. That’s where embedded machine learning offers a more efficient path forward.

For example, predictive maintenance powered by embedded AI can help your vehicle maintenance team spot early signs of system degradation, reducing the risk of failures in critical components.

Additionally, anomaly detection can help spot unusual patterns in sensor data, which might indicate software bugs or hardware faults. These capabilities strengthen in-vehicle safety protocols and can add an extra layer of intelligence to how systems respond under pressure.

The growing interest in this area is reflected in common user searches like "what is embedded AI?" Many teams are actively exploring how to make AI work within their embedded constraints, often with limited internal guidance or precedent. So, if you’re in that position, you’re not the only one.

To see how others are navigating this space, take a look at Parasoft’s insights into embedded systems, which cover how teams are using AI to improve testing and development outcomes.

Implementing AI and machine learning solutions in embedded systems begins with selecting the right use case. Whether it’s driver monitoring, component diagnostics, or predictive control, each application has different demands for resource allocation, sensor data processing, and real-time analysis. Keeping the model small and efficient is key.
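A quick back-of-the-envelope estimate helps decide whether a candidate model can fit at all. The sketch below assumes a simple model built only from fully connected (dense) layers; it counts parameters and multiply-accumulate operations (MACs) and converts parameters into storage bytes. The types and function names are illustrative, not part of any particular framework.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical description of one fully connected layer. */
typedef struct {
    uint32_t inputs;
    uint32_t outputs;
} DenseLayer;

typedef struct {
    uint64_t params;       /* weights + biases */
    uint64_t macs;         /* multiply-accumulates per inference */
    uint64_t weight_bytes; /* params * bytes per parameter */
} ModelEstimate;

/* Parameters of one dense layer: one weight per input/output pair plus one bias per output. */
static uint64_t dense_params(DenseLayer l) {
    return (uint64_t)l.inputs * l.outputs + l.outputs;
}

/* MACs of one dense layer for a single inference. */
static uint64_t dense_macs(DenseLayer l) {
    return (uint64_t)l.inputs * l.outputs;
}

/* Sums the per-layer figures; bytes_per_param is 4 for float32, 1 for int8. */
ModelEstimate estimate_model(const DenseLayer *layers, size_t n, unsigned bytes_per_param) {
    ModelEstimate e = {0u, 0u, 0u};
    for (size_t i = 0; i < n; ++i) {
        e.params += dense_params(layers[i]);
        e.macs += dense_macs(layers[i]);
    }
    e.weight_bytes = e.params * bytes_per_param;
    return e;
}
```

For two layers of 64→32 and 32→4, this gives 2,212 parameters and 2,176 MACs per inference: 8,848 bytes of weights in float32 versus 2,212 bytes in int8. Real int8 deployments often keep biases in int32 and add per-layer scale factors, so treat the int8 figure as a lower bound.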

Here’s a brief step-by-step guide:

Parasoft often sees teams struggle with calibrating hardware requirements or estimating compute power for AI models. There’s no one-size-fits-all formula for these common sticking points. What helps is performing trade studies and having clear benchmarks to evaluate what "good enough" looks like. From power consumption to inference time, defining success early gives teams a practical roadmap.
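One way to pin down "good enough" before a trade study is to encode the agreed targets as data and check every candidate against them. This is a minimal sketch under that assumption; the four criteria and the struct and function names are hypothetical examples, and measurements are assumed to come from the target hardware.

```c
#include <stdint.h>

/* Hypothetical "good enough" targets agreed before the trade study. */
typedef struct {
    float max_latency_ms;   /* worst observed inference time */
    uint32_t max_ram_bytes; /* peak working memory */
    float max_power_mw;     /* average power during inference */
    float min_accuracy;     /* task metric on the validation set, 0..1 */
} AcceptanceCriteria;

/* Values measured on the target hardware for one candidate model. */
typedef struct {
    float latency_ms;
    uint32_t ram_bytes;
    float power_mw;
    float accuracy;
} Measurement;

/* Bit flags identifying which criteria a candidate failed. */
enum {
    FAIL_LATENCY  = 1 << 0,
    FAIL_RAM      = 1 << 1,
    FAIL_POWER    = 1 << 2,
    FAIL_ACCURACY = 1 << 3
};

/* Returns 0 if every criterion is met, otherwise an OR of FAIL_* flags. */
unsigned evaluate_candidate(const AcceptanceCriteria *c, const Measurement *m) {
    unsigned failures = 0u;
    if (m->latency_ms > c->max_latency_ms) failures |= FAIL_LATENCY;
    if (m->ram_bytes > c->max_ram_bytes)   failures |= FAIL_RAM;
    if (m->power_mw > c->max_power_mw)     failures |= FAIL_POWER;
    if (m->accuracy < c->min_accuracy)     failures |= FAIL_ACCURACY;
    return failures;
}
```

A return value of 0 means the candidate meets every target; otherwise the bit flags show which budgets it broke, which makes results easy to log and compare across trade-study runs.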

The embedded AI market is growing rapidly: projections from firms such as Mordor Intelligence and Grand View Research place its value at over $10 billion by the end of 2025. That growth reflects accelerating adoption of AI in embedded systems across many sectors, particularly automotive, where the pressure to deliver lean, reliable models continues to grow.

Optimizing your AI algorithms for embedded systems is essential for keeping pace with real-time demands. It’s understandable if this feels overwhelming. Many teams are unsure how to meet strict timing requirements while staying within memory and processing limits. You’re not alone in wondering how to build something smart that still runs lean.

A good way forward is to break the process into smaller, manageable steps, such as the following:

Taking these steps early on reduces the friction that could appear later in testing or deployment.

For comprehensive guidance on testing embedded systems, explore our guide on automated testing in embedded environments. It offers clear, practical advice on how to bring AI and testing together in a streamlined workflow.

Embedding AI in automotive software often means working around what already exists: the boundaries of existing control units, powertrain components, or long-established software routines.

Deploying AI on legacy embedded platforms isn’t simply a matter of running a model. The model usually has to be transformed and optimized through quantization, pruning, and more efficient architectures, and it may need specialized hardware, so that it fits within embedded constraints while preserving the rock-solid stability and compliance that safety-critical applications require. Without these techniques, integrating AI onto legacy platforms is usually impractical, and a poor integration risks disrupting the system’s core, critical functions.

We understand that no one wants to break something that’s already safe and certified. But it’s possible to bring in AI thoughtfully, step by step.
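One common way to introduce AI step by step without disturbing certified behavior is shadow mode: the AI path runs in parallel and is logged, but the legacy logic keeps control of the actuator. The following is a minimal sketch of that idea in C; the ShadowMonitor type, the tolerance, and the disagreement metric are assumptions for illustration.

```c
#include <math.h>
#include <stdint.h>

/* Shadow-mode monitor: the AI path runs alongside the certified legacy
 * logic, but only the legacy command reaches the actuator. */
typedef struct {
    float tolerance;        /* allowed |ai - legacy| before counting a disagreement */
    uint32_t samples;       /* control cycles observed */
    uint32_t disagreements; /* cycles where the AI output was off or invalid */
} ShadowMonitor;

/* Records one control cycle and returns the command to apply,
 * which is always the legacy command in shadow mode. */
float shadow_step(ShadowMonitor *m, float legacy_cmd, float ai_cmd) {
    m->samples++;
    if (!isfinite(ai_cmd) || fabsf(ai_cmd - legacy_cmd) > m->tolerance) {
        m->disagreements++;
    }
    return legacy_cmd;
}

/* Fraction of cycles in which the AI path disagreed with the legacy path. */
float shadow_disagreement_rate(const ShadowMonitor *m) {
    return m->samples ? (float)m->disagreements / (float)m->samples : 0.0f;
}
```

A low, well-understood disagreement rate gathered over many drive cycles is the kind of evidence a team might want before letting the AI output influence control at all.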

For an in-depth exploration of the testing practices that help ensure stability and compliance when introducing AI features to an automotive system, explore our guide to cracking the code for safe AI in embedded systems.

There are several security and privacy concerns to factor in when deploying AI in embedded systems. First off, the systems are designed to process sensitive vehicle and user data. A single vulnerability can impact both safety and trust. Hence, automotive AI must follow strict security protocols from design to deployment.

The key conventions include:

These conventions are part of broader cybersecurity regulatory requirements like UNECE WP.29 R155 and standards like ISO/SAE 21434 for road vehicle cybersecurity. They work alongside functional safety guidelines such as ISO 26262.
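A small, concrete example of a design-to-deployment safeguard is refusing to load a model whose bytes have changed since they were built. The sketch below computes a standard CRC-32 (IEEE 802.3) and gates loading on it; the function names are hypothetical. It only detects accidental corruption, such as a bad flash write: a CRC is not a cryptographic check, because anyone who can modify the model can also recompute it. Protection against deliberate tampering requires a cryptographic signature, typically verified as part of secure boot.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Bitwise CRC-32 (IEEE 802.3, reflected polynomial 0xEDB88320).
 * Slow but table-free, which keeps flash usage small. */
uint32_t crc32_ieee(const uint8_t *data, size_t len) {
    uint32_t crc = 0xFFFFFFFFu;
    for (size_t i = 0; i < len; ++i) {
        crc ^= data[i];
        for (int bit = 0; bit < 8; ++bit) {
            if (crc & 1u) {
                crc = (crc >> 1) ^ 0xEDB88320u;
            } else {
                crc >>= 1;
            }
        }
    }
    return ~crc;
}

/* Refuses to hand a model blob to the inference runtime unless its CRC
 * matches the value recorded when the blob was built. */
bool model_blob_is_intact(const uint8_t *blob, size_t len, uint32_t expected_crc) {
    return blob != NULL && crc32_ieee(blob, len) == expected_crc;
}
```

The standard CRC-32 check value for the ASCII string "123456789" is 0xCBF43926, which is a convenient self-test for any port of this function.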

While these privacy and security points may appear straightforward, experience has taught us that they can be overwhelming in practice. It’s normal to feel uncertain about AI security, because most organizations are still learning the best practices.

You may find it interesting to check out security testing capabilities available through tools for testing C/C++ in embedded applications. These tools help validate security and safety and satisfy compliance requirements.

Integrating AI into safety-critical embedded systems such as automotive platforms introduces distinct risks, including probabilistic behavior, algorithmic uncertainty, data dependencies, and edge-case failures, that demand tailored risk management. The core principles of redundancy, transparency, verification, and adaptive safeguards remain vital, but establishing quantifiable trust signals and adhering to industry benchmarks become critical as well.

Organizations must define explicit risk acceptance criteria (such as tolerable AI error rates) aligned with system safety goals and their ASILs, just as they bound hardware failure thresholds. Trust signals, such as ISO 26262 certification, SOTIF (ISO 21448) validation reports, or cybersecurity compliance (ISO/SAE 21434 and UNECE R155), provide auditable proof that these risk thresholds have been met.

Meanwhile, industry benchmarks, such as object detection scores on nuScenes and robustness metrics from adversarial suites, operationalize "acceptable risk" into measurable targets, validating that AI performance stays within safety boundaries under diverse conditions.

Evolving regulations reinforce this framework. Standards like ISO 21448 (SOTIF) mandate validation of AI performance limits against real-world benchmarks, while UNECE R156 requires proof that OTA updates maintain benchmarked safety/security levels.

Effective AI risk management thus combines traditional safety engineering with AI-specific methods (explainability tools, dynamic monitoring) and leverages trust signals as compliance artifacts and benchmarks as risk quantification tools. Crucially, it addresses ethical dimensions (bias, fairness) through benchmarks like NIST’s AI fairness metrics and stakeholder validation.

Ultimately, linking risk thresholds to standardized benchmarks and generating verifiable trust signals ensures AI enhances capability without compromising safety in resource-constrained environments, turning abstract risk into demonstrable safety.

Tools like Parasoft C/C++test play a critical role in this ecosystem by automating static and dynamic verification of safety-critical C/C++ components, including AI/ML runtimes and legacy integration layers. They generate auditable trust signals, such as MISRA compliance reports and ASIL-related verification evidence, and ensure foundational code integrity aligns with risk thresholds.

The upfront cost of embedded AI in automotive software can be difficult to justify without a clear link to ROI. That pressure is familiar to most engineering and QA teams looking to modernize their testing pipelines, especially when full resourcing depends on early results.

Deploying machine learning on embedded systems involves significant development costs. These include domain-specific data curation, model optimization (such as quantization and pruning for hardware constraints), and rigorous safety and compliance validation aligned with ISO 26262 and ISO 21448.

Deployment expenses cover hardware tradeoffs, such as reusing legacy silicon versus adding neural processing unit (NPU) accelerators, and integration into platforms like AUTOSAR. Operational costs arise from power management, as well as maintaining over-the-air (OTA) update pipelines compliant with standards like UNECE R156.

Therefore, the ROI justification must be multi-faceted. Edge Lifecycle Management (ELM) handles the complete lifecycle of software, data, and AI models deployed at the edge, such as in vehicles, including deployment, updates, monitoring, analytics, and decommissioning.

By managing this lifecycle efficiently at the edge, ELM achieves the following:

Just as importantly, it helps avoid major expenses. Predictive analytics can reduce warranty costs by 8 to 12%, while strong compliance practices help prevent costly recalls.

Strategically, organizations should prioritize high-impact use cases, such as safety functions and revenue-generating features, over niche applications.

While the upfront investments in optimization and validation are substantial, they lay the groundwork for scalable ELM deployment. Over time, this transforms costs into long-term efficiency gains, improved market access, and reduced liability.

Access a free ROI assessment template from Parasoft to evaluate the value of implementing static analysis, unit testing, and other automated testing solutions. Compare projected savings with upfront investments and build a compelling internal business case.
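The core arithmetic behind such a business case is simple. The sketch below computes an undiscounted ROI over a chosen horizon and a payback period; it is not Parasoft's template, the struct and function names are hypothetical, and a finance team would normally add discounting and risk adjustments.

```c
/* Hypothetical inputs for a tooling business case; all figures are
 * placeholders to be replaced with your own estimates. */
typedef struct {
    double upfront_cost;        /* licenses, integration, training */
    double annual_running_cost; /* maintenance, support, infrastructure */
    double annual_savings;      /* e.g. recovered hours x loaded hourly rate */
} RoiInputs;

/* Simple (undiscounted) ROI over `years`: (savings - cost) / cost. */
double simple_roi(const RoiInputs *in, unsigned years) {
    double cost = in->upfront_cost + in->annual_running_cost * years;
    double savings = in->annual_savings * years;
    return (savings - cost) / cost;
}

/* Months until net savings repay the upfront cost; -1.0 if they never do. */
double payback_months(const RoiInputs *in) {
    double monthly_net = (in->annual_savings - in->annual_running_cost) / 12.0;
    return monthly_net > 0.0 ? in->upfront_cost / monthly_net : -1.0;
}
```

With placeholder figures of 200,000 upfront, 50,000 a year in running costs, and 250,000 a year in savings, the three-year ROI is about 114% and the payback period is 12 months.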

Performance in embedded AI/ML hinges on deterministic execution within resource limits. Key factors include:

Vehicle powered by sensors, driven by AI.

Testing must validate worst-case execution time (WCET), memory leaks, and thermal stability under stress scenarios like sensory overload or adversarial inputs. For legacy systems, performance testing also verifies that AI workloads do not starve critical control tasks of CPU cycles.
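A common first step in timing validation is measuring the longest execution time observed across many runs. The sketch below does this on a POSIX host with clock_gettime; on a microcontroller you would typically read a hardware cycle counter instead. Keep in mind that an observed maximum is only a lower bound on the true WCET: certification arguments usually pair measurement with static timing analysis or justified safety margins. The function names and the dummy_inference stand-in are hypothetical.

```c
#define _POSIX_C_SOURCE 199309L
#include <stdint.h>
#include <time.h>

typedef void (*InferenceFn)(void *ctx);

/* Monotonic timestamp in nanoseconds (POSIX host; on an MCU this would
 * typically come from a hardware cycle counter instead). */
static uint64_t now_ns(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (uint64_t)ts.tv_sec * 1000000000u + (uint64_t)ts.tv_nsec;
}

/* Runs `fn` `runs` times and returns the longest observed duration (ns).
 * This is a high-water mark, i.e. a lower bound on the true WCET. */
uint64_t max_observed_ns(InferenceFn fn, void *ctx, unsigned runs) {
    uint64_t worst = 0u;
    for (unsigned i = 0; i < runs; ++i) {
        uint64_t start = now_ns();
        fn(ctx);
        uint64_t elapsed = now_ns() - start;
        if (elapsed > worst) worst = elapsed;
    }
    return worst;
}

/* Hypothetical stand-in for a model invocation: counts its calls. */
void dummy_inference(void *ctx) {
    uint32_t *calls = (uint32_t *)ctx;
    (*calls)++;
}
```

Comparing the returned value against a per-task budget (for example, a hypothetical 5 ms inference slot) under stressed inputs is what turns this from a benchmark into a test.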

Scalability addresses how AI/ML systems adapt to evolving demands. This includes vertical scaling (optimizing models for new hardware accelerators) and horizontal scaling (distributing inference across ECUs).

Testing must ensure over-the-air (OTA) model updates—mandated by standards like UNECE R156—do not degrade real-time performance or compromise safety. Scalability testing also validates edge-to-cloud hybrid workflows, where preprocessing on embedded devices reduces cloud dependency while maintaining accuracy.
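A scalability test for OTA updates can be expressed as a gate that compares a candidate model against the deployed one before it is allowed to ship. This sketch assumes latency is summarized as a 99th-percentile value computed with the nearest-rank method and that accuracy is measured on a fixed validation set; the thresholds and names are hypothetical, and the gate is an illustration, not a requirement taken from UNECE R156.

```c
#include <stddef.h>
#include <stdlib.h>

/* Latency and accuracy measured for one model on the target hardware. */
typedef struct {
    float p99_latency_ms;
    float accuracy;
} ModelBenchmark;

typedef enum {
    UPDATE_ACCEPT,
    UPDATE_REJECT_LATENCY,
    UPDATE_REJECT_ACCURACY
} UpdateDecision;

/* qsort comparator: ascending order of floats. */
static int cmp_float(const void *a, const void *b) {
    float x = *(const float *)a;
    float y = *(const float *)b;
    return (x > y) - (x < y);
}

/* Nearest-rank percentile, pct in 1..100, n > 0. Sorts samples in place. */
float percentile(float *samples, size_t n, unsigned pct) {
    qsort(samples, n, sizeof *samples, cmp_float);
    size_t rank = (pct * n + 99u) / 100u; /* integer ceil(pct * n / 100) */
    if (rank == 0u) rank = 1u;
    return samples[rank - 1u];
}

/* Accepts a candidate only if it stays inside the latency budget and does
 * not lose more than max_accuracy_drop versus the deployed model. */
UpdateDecision gate_model_update(const ModelBenchmark *deployed,
                                 const ModelBenchmark *candidate,
                                 float latency_budget_ms,
                                 float max_accuracy_drop) {
    if (candidate->p99_latency_ms > latency_budget_ms) {
        return UPDATE_REJECT_LATENCY;
    }
    if (candidate->accuracy < deployed->accuracy - max_accuracy_drop) {
        return UPDATE_REJECT_ACCURACY;
    }
    return UPDATE_ACCEPT;
}
```

With a 10 ms budget and a maximum accuracy drop of 0.005, a candidate at 7.5 ms and 0.948 accuracy would be accepted against a deployed model at 0.950, while one at 11 ms would be rejected regardless of its accuracy.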

Critical testing focus areas include:

On the standards side, ISO 26262 requires predictable performance for safety functions, while ISO 21448 (SOTIF) requires testing AI performance limits in edge cases. Failing to scale efficiently risks violating these frameworks, for example if an autonomous system exceeds its latency budget during pedestrian detection.

If you’re rethinking your AI testing strategy, explore Parasoft’s automated testing best practices for practical insights.

Embedded AI in automotive systems needs more than functional code. It needs a testing process that accounts for complexity, hardware constraints, and unpredictable road conditions, all of which help to ensure the system behaves as intended, every time.

Below are standard test flows for automotive AI software projects.

Unit testing is essential for verifying AI components, not by testing the ML model itself, but by rigorously validating the runtime code, integration layers, and safety mechanisms that enable AI functionality.
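As an example of what validating the runtime code can mean, here is a plain assert-based unit test (not C/C++test's own format) for a hypothetical pre-processing guard that normalizes a vehicle-speed input before it reaches a model. The 0 to 300 km/h range is an assumption for illustration. The tests cover nominal values, both range boundaries, and invalid inputs such as NaN and infinity.

```c
#include <assert.h>
#include <math.h>
#include <stdbool.h>

/* Hypothetical guard: accepts a speed in [0, 300] km/h and writes the
 * value normalized to [0, 1]. Rejects NaN, infinity, and out-of-range
 * inputs without touching *out. */
bool normalize_speed_input(float raw_kph, float *out) {
    if (!isfinite(raw_kph) || raw_kph < 0.0f || raw_kph > 300.0f) {
        return false;
    }
    *out = raw_kph / 300.0f;
    return true;
}

/* Unit tests: nominal, boundary, and invalid inputs. */
void test_normalize_speed_input(void) {
    float v = -1.0f;
    assert(normalize_speed_input(150.0f, &v) && v == 0.5f);
    assert(normalize_speed_input(0.0f, &v) && v == 0.0f);
    assert(normalize_speed_input(300.0f, &v) && v == 1.0f);
    v = -1.0f;
    assert(!normalize_speed_input(300.1f, &v) && v == -1.0f); /* output untouched */
    assert(!normalize_speed_input(-0.5f, &v));
    assert(!normalize_speed_input(NAN, &v));
    assert(!normalize_speed_input(INFINITY, &v));
}
```

Tests like these exercise the code that surrounds inference, where the input contract and error handling live, and they stay valid when the model weights are retrained.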

Integration testing for automotive AI validates how individual components, such as perception, planning, and control, interact within the broader vehicle ecosystem, exposing emergent risks that unit testing cannot detect.

It verifies interfaces between AI runtimes (C/C++/Python), legacy AUTOSAR stacks, and hardware sensors/actuators under real-world scenarios, such as sensor conflicts during heavy rain or timing delays in emergency braking chains.

Crucially, it confirms that safety mechanisms (such as fallbacks for low-confidence AI outputs, watchdog timers) function as designed across subsystem boundaries, preventing cascading failures. Integration testing also benchmarks resource usage (CPU, memory, bandwidth) when AI tasks scale across ECUs, ensuring compliance with real-time deadlines (ISO 26262) and SOTIF (ISO 21448) edge-case requirements. For AI-dependent features like autonomous parking or adaptive cruise control, this process bridges the gap between algorithmic performance and vehicle-level safety, turning abstract risk thresholds into verifiable system behavior.
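The fallback mechanism described above can be sketched as a small arbitration function. In this hypothetical example, the AI command is used only if it arrived on time (as a watchdog or deadline monitor would report), is a finite number, and meets a confidence threshold; otherwise a conventional fallback command is applied. Integration tests would then drive this function through boundary conditions such as late or malformed AI outputs arriving from another subsystem.

```c
#include <math.h>
#include <stdbool.h>

typedef struct {
    float value;      /* e.g. requested deceleration */
    float confidence; /* model-reported confidence, 0..1 */
} AiOutput;

typedef enum { SOURCE_AI, SOURCE_FALLBACK } CommandSource;

typedef struct {
    float command;
    CommandSource source;
} ArbitratedCommand;

/* Uses the AI output only if it arrived before its deadline, is finite,
 * and meets the confidence threshold; otherwise applies the fallback.
 * A NaN confidence fails the >= comparison and also selects the fallback. */
ArbitratedCommand arbitrate(AiOutput ai, bool ai_on_time,
                            float fallback_cmd, float min_confidence) {
    ArbitratedCommand out = {fallback_cmd, SOURCE_FALLBACK};
    if (ai_on_time && isfinite(ai.value) && ai.confidence >= min_confidence) {
        out.command = ai.value;
        out.source = SOURCE_AI;
    }
    return out;
}
```

Returning the source along with the command lets integration tests and logs confirm which path was taken, not just the value that reached the actuator.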

System-level testing for automotive AI validates end-to-end functionality against real-world operational scenarios, ensuring the complete vehicle meets safety, regulatory, and user expectations. The goal is to recreate the full driving stack, under realistic load, with complex variables introduced through simulation or pre-recorded driving data.

It subjects integrated AI features—like autonomous driving stacks—to high-fidelity simulations and physical trials, testing emergency maneuvers in adverse weather, complex urban intersections, or sensor failure modes, to verify compliance with standards like ISO 26262, ISO 21448 (SOTIF) for handling edge cases, and UNECE R157 for automated lane-keeping.

Crucially, it confirms that AI-driven decisions, like collision avoidance, align with vehicle dynamics and ethical priorities while maintaining graceful degradation when exceeding operational limits. System testing also benchmarks performance against industry metrics, for example, Euro NCAP safety scores, providing evidence that AI risks remain within accepted thresholds across the vehicle’s lifecycle.
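System-level campaigns are often organized as tables of scenarios run against a simulation. The sketch below shows that structure with a deliberately simple constant-deceleration braking model standing in for a high-fidelity simulator; the scenario values are illustrative, not calibrated data, and the names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

/* One hypothetical scenario row: initial speed, achievable deceleration for
 * the road condition, latency of the perception-to-brake chain, and the
 * distance to the obstacle when it is first detected. */
typedef struct {
    const char *name;
    float speed_mps;
    float decel_mps2;
    float latency_s;
    float obstacle_m;
} BrakingScenario;

/* Constant-deceleration stopping distance: v * t + v^2 / (2a). */
float stopping_distance_m(const BrakingScenario *s) {
    return s->speed_mps * s->latency_s
         + (s->speed_mps * s->speed_mps) / (2.0f * s->decel_mps2);
}

/* Evaluates every scenario, writes a pass/fail flag per row,
 * and returns the number of failures. */
size_t run_scenarios(const BrakingScenario *table, size_t n, bool *passed) {
    size_t failures = 0;
    for (size_t i = 0; i < n; ++i) {
        passed[i] = stopping_distance_m(&table[i]) < table[i].obstacle_m;
        if (!passed[i]) ++failures;
    }
    return failures;
}
```

At 50 km/h with 0.5 s of perception-to-brake latency, this model stops in about 23 m on a dry road (6 m/s²) but needs about 31 m on a wet one (4 m/s²), so the wet scenario fails against a 30 m obstacle. In a real campaign, the same table-driven structure would feed scenarios to the simulator or replay recorded drives.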

As teams advance through these stages, several parameters also need close attention:

Staying updated on these testing frameworks can feel like a full-time task. Between shifting standards and evolving toolchains, teams can find themselves under pressure to manage it all manually. But adopting purpose-built tooling can help automate key steps and reduce friction.

For teams looking to improve their AI and ML testing workflow, Parasoft offers a free evaluation that supports embedded environments. It’s designed to help you move from manual processes to a structured, scalable test strategy.

Supporting embedded AI in automotive systems demands tools that transcend raw processing power. Engineering teams need integrated frameworks capable of navigating rapid change, stringent safety requirements, and unpredictable real-world conditions, while filtering signal from noise in a crowded toolchain landscape.

Essential pillars include:

The integration challenge is relentless: new AI stacks must coexist with certified legacy systems (AUTOSAR), adhere to evolving regulations (UNECE R156), and deliver deterministic performance. Tools that unify development, not fragment it, are critical to maintaining focus amid complexity.

For strategic implementation, prioritize frameworks that:

Investing in such ecosystems doesn’t just stabilize today’s workloads; it builds a foundation for certified, scalable AI deployment.

When adopting embedded AI in automotive systems, a clear, structured roadmap helps your team move forward with confidence, avoid costly misalignment, and ensure reliability, safety, and compliance within stringent vehicle constraints.

By following these strategic steps, automotive teams can navigate the complexities of AI integration, unlocking new levels of vehicle intelligence, safety, and performance.

Explore our roadmap for safe and scalable automotive AI, featuring a detailed, step-by-step guide.

Embedded AI is transforming automotive software development, but success depends on more than innovation—it requires disciplined engineering. From managing hardware constraints to ensuring compliance with functional safety and cybersecurity standards, the road to scalable AI integration is complex but achievable. By embracing structured testing practices, optimizing for performance and reliability, and leveraging proven tools, engineering teams can shift from uncertainty to confidence.

You don’t need to have all the answers today. But taking control of your test strategy sets everything in motion.

See how automated testing supports embedded AI that’s built to last.